Neural networks are at the core of many technologies we use every day, from digital assistants to medical imaging tools. Loosely inspired by how the human brain works, they are designed to process information, recognize patterns, and learn from data. At their heart, they consist of layers of nodes that pass signals forward and adjust their internal weights during training. This simple idea has fueled a huge range of applications, making neural networks one of the most studied areas in artificial intelligence today.

What Is a Neural Network?

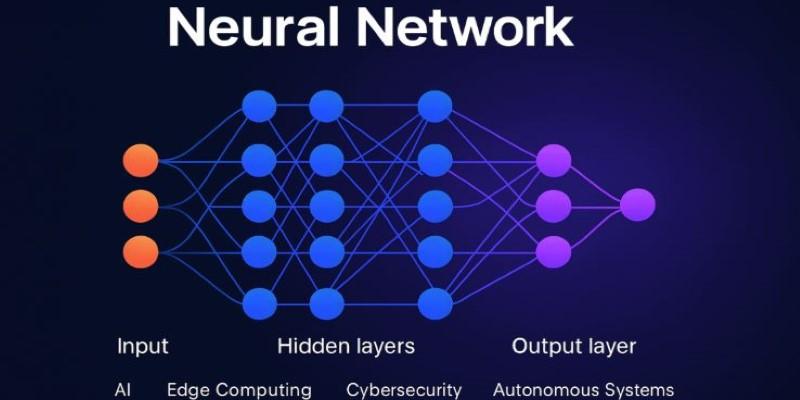

A neural network is a model built from layers of interconnected nodes, often called neurons. Each connection has a weight that changes as the system learns, which allows it to adapt and improve predictions over time. These models belong to the larger field of machine learning and, when deep and layered, fall under deep learning.

The process begins with input data moving through hidden layers, where each layer applies a weighted sum followed by a non-linear activation. The final output reflects what the network has learned so far. Training relies on backpropagation, which works backward from the output error to compute how much each weight contributed to it; an optimizer such as gradient descent then adjusts the weights to reduce that error. This ability to learn complex, non-linear relationships makes neural networks far more capable than many traditional algorithms on tasks such as recognizing images, classifying text, or predicting outcomes from messy datasets.
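To make the training loop concrete, here is a minimal sketch of backpropagation on a deliberately tiny network: one input, one tanh hidden unit, and one linear output. The network shape, the squared-error loss, the learning rate, and the toy data are all assumptions chosen for illustration, not something the article prescribes.

```python
import math

def forward(params, x):
    """Forward pass: input -> one tanh hidden unit -> linear output."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    y_hat = w2 * h + b2
    return h, y_hat

def backward(params, x, y):
    """Gradients of L = 0.5 * (y_hat - y)^2 via the chain rule."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_out = y_hat - y                       # dL/dy_hat
    d_hidden = d_out * w2 * (1.0 - h * h)   # pushed back through tanh
    return [d_hidden * x, d_hidden, d_out * h, d_out]

def mean_loss(params, data):
    return sum(0.5 * (forward(params, x)[1] - y) ** 2 for x, y in data) / len(data)

def train(params, data, lr=0.1, epochs=200):
    """Plain stochastic gradient descent, one example at a time."""
    for _ in range(epochs):
        for x, y in data:
            grads = backward(params, x, y)
            params = [p - lr * g for p, g in zip(params, grads)]
    return params

# Toy data and starting weights (illustrative values only).
data = [(-1.0, -0.5), (0.0, 0.0), (1.0, 0.5)]
initial = [0.5, 0.0, 0.5, 0.0]
trained = train(initial, data)
print(mean_loss(initial, data), mean_loss(trained, data))
```

The backward function is the backpropagation step; the list comprehension in `train` is the gradient descent update that actually changes the weights.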

Types of Neural Networks

Feedforward Neural Networks (FNN)

Feedforward neural networks are the most basic structure. Information flows in one direction only: from the input layer, through hidden layers, to the output layer. No cycles exist, and in the classic form each layer is fully connected to the next.

This simplicity makes them effective for classification and regression tasks where patterns are straightforward, such as detecting spam emails or predicting house prices. While they don’t handle context or sequential data, their clear design and efficiency make them a foundation for many other network types.
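The one-directional flow described above can be sketched in a few lines of plain Python. The layer sizes, the weights, and the choice of ReLU as the hidden activation are assumptions made for the example.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    """Fully connected layer: weights[j][i] links input i to output neuron j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def feedforward(inputs, layers):
    """Pass the input through each layer in order; no loops back."""
    activation = inputs
    for index, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if index < len(layers) - 1:   # keep the final layer linear
            activation = relu(activation)
    return activation

# A 2 -> 2 -> 1 network with hand-picked weights (illustrative only).
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.5]),
]
output = feedforward([2.0, 1.0], layers)
print(output)
```

For a regression task such as house-price prediction the single linear output would be used directly; for classification it would usually be passed through a sigmoid or softmax.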

Convolutional Neural Networks (CNN)

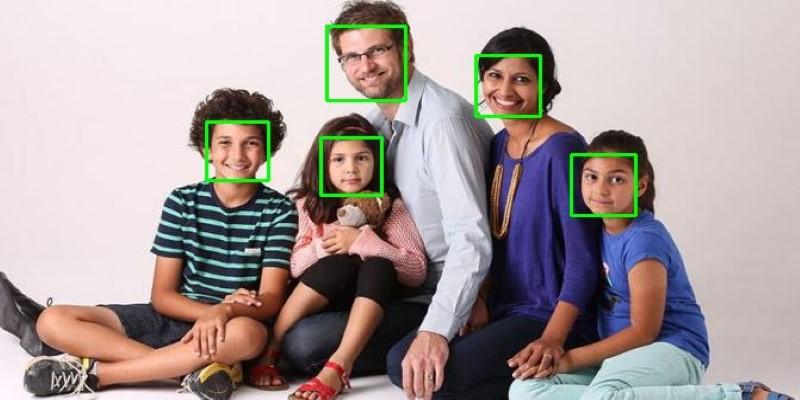

Convolutional neural networks are best known for processing visual information. Instead of connecting every neuron to every neuron in the next layer, they slide small filters across the input, detecting local features like lines, edges, and textures.

By stacking convolutional and pooling layers, CNNs shrink the feature maps and keep the parameter count manageable while preserving the most salient features. At the final stage, fully connected layers classify what the network has seen. These qualities make CNNs the standard for image recognition, video analysis, and medical imaging. They are also used in self-driving cars to interpret traffic signs, pedestrians, and road conditions.
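As a rough illustration of filtering followed by pooling, the sketch below slides a hand-written 2x2 vertical-edge filter over a tiny image and then applies 2x2 max pooling. The image, the filter values, and the absence of padding are assumptions for the example; in a real CNN the filter values are learned.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [
        [
            max(feature_map[r + i][c + j]
                for i in range(size) for j in range(size))
            for c in cols
        ]
        for r in rows
    ]

# 5x5 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]

features = conv2d(image, vertical_edge)   # 4x4 map, strongest at the edge
pooled = max_pool(features)               # 2x2 summary of that map
print(features)
print(pooled)
```

The feature map responds only where brightness changes from left to right, and pooling halves each dimension while keeping that response.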

Recurrent Neural Networks (RNN)

Recurrent neural networks bring memory into play by feeding a hidden state from one time step back into the model at the next. This feedback allows them to capture context, which is vital for working with sequences such as text, speech, or time-based data.

An RNN carries past information forward while processing new input, making it useful for language modeling, caption generation, or predicting stock movements. But traditional RNNs struggle with long-term memory: the influence of early inputs fades over many steps, and the gradients used in training shrink along with it (the vanishing gradient problem). This shortcoming inspired more advanced designs like LSTMs and GRUs.
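The recurrence can be sketched as a single update rule applied once per time step. The tanh activation, the weight names `w_xh` and `w_hh`, and the one-unit example are assumptions for illustration.

```python
import math

def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_xh[j], x))
            + sum(w * hi for w, hi in zip(w_hh[j], h_prev))
            + b_h[j]
        )
        for j in range(len(b_h))
    ]

def run_rnn(sequence, w_xh, w_hh, b_h):
    """Carry the hidden state across the whole sequence."""
    h = [0.0] * len(b_h)
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states

# One input feature, one hidden unit (illustrative weights).
states = run_rnn(
    sequence=[[1.0], [0.0], [0.0]],
    w_xh=[[1.0]],
    w_hh=[[0.5]],
    b_h=[0.0],
)
print(states)
```

Only the first input is non-zero, yet the later hidden states stay positive because the state is fed back in. They also shrink at every step, which is a small-scale picture of how early signals fade.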

Long Short-Term Memory (LSTM) Networks

LSTMs were created to address the memory loss in RNNs. They include memory cells with input, forget, and output gates that control what information is stored, updated, or forgotten. This makes them highly effective for learning long-term dependencies in data.

They are widely used in translation systems, text generation, and speech recognition. LSTMs can generate human-like text by learning sentence structure and context, which made them the backbone of many natural language systems. Their ability to retain and manage information across long sequences marked a breakthrough in sequence modeling.
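A minimal sketch of one LSTM step shows how the gates decide what the cell keeps. To stay readable it uses a single scalar input and a single hidden unit; the parameter layout (a dict of `(w_x, w_h, bias)` tuples) and the extreme bias values are assumptions for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def pre_activation(params, name, x, h_prev):
    w_x, w_h, bias = params[name]
    return w_x * x + w_h * h_prev + bias

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step for a scalar input and a single hidden unit."""
    f = sigmoid(pre_activation(params, "forget", x, h_prev))       # how much old memory to keep
    i = sigmoid(pre_activation(params, "input", x, h_prev))        # how much new content to write
    o = sigmoid(pre_activation(params, "output", x, h_prev))       # how much memory to expose
    g = math.tanh(pre_activation(params, "candidate", x, h_prev))  # proposed new content
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c

# Biases chosen so the cell holds on to its memory: forget gate open,
# input gate closed (illustrative values, not learned ones).
hold = {
    "forget": (0.0, 0.0, 20.0),
    "input": (0.0, 0.0, -20.0),
    "output": (0.0, 0.0, 20.0),
    "candidate": (1.0, 1.0, 0.0),
}
h, c = lstm_step(x=5.0, h_prev=0.1, c_prev=0.8, params=hold)
print(h, c)
```

With these settings the cell state stays at roughly its previous value even though the new input is large, which is the mechanism that lets an LSTM preserve information over long spans.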

Gated Recurrent Unit (GRU) Networks

GRUs simplify the LSTM design by using two gates (update and reset) instead of three and merging the cell state into the hidden state, while keeping the model’s ability to remember sequences. With fewer parameters, they are faster to train and less resource-heavy.

GRUs often match LSTMs in performance and are especially helpful when working with limited computing power. They are frequently applied in mobile technologies and real-time applications, where efficiency matters as much as accuracy.
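For comparison, here is a sketch of one GRU step with a scalar input and a single hidden unit. The parameter layout is an assumption, and so is the blending convention: this version treats the update gate `z` as the share of new content, while some papers and libraries swap the roles of `z` and `1 - z`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, params):
    """One GRU step for a scalar input and a single hidden unit."""
    wz, uz, bz = params["update"]
    wr, ur, br = params["reset"]
    wh, uh, bh = params["candidate"]

    z = sigmoid(wz * x + uz * h_prev + bz)   # update gate: share of new content
    r = sigmoid(wr * x + ur * h_prev + br)   # reset gate: how much past to consult
    candidate = math.tanh(wh * x + uh * (r * h_prev) + bh)
    return (1.0 - z) * h_prev + z * candidate

# Illustrative settings: one that keeps the old state, one that overwrites it.
keep = {"update": (0.0, 0.0, -20.0), "reset": (0.0, 0.0, 0.0), "candidate": (1.0, 1.0, 0.0)}
overwrite = {"update": (0.0, 0.0, 20.0), "reset": (0.0, 0.0, -20.0), "candidate": (1.0, 1.0, 0.0)}

kept = gru_step(5.0, 0.3, keep)
replaced = gru_step(0.5, 0.3, overwrite)
print(kept, replaced)
```

There is no separate cell state and only two gates, so each step needs three sets of weights rather than the four an LSTM uses.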

Generative Adversarial Networks (GAN)

Generative adversarial networks introduce a unique setup with two models working against each other. A generator creates synthetic data, while a discriminator judges whether the data is real or fake. The constant competition pushes both models to improve over time.

GANs are capable of producing images, music, and video content that can be difficult to distinguish from real examples. They have been used in creative industries, from designing fashion to generating artwork, and in practical fields like producing training data when real datasets are scarce.
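The competition between the two models is usually expressed through their loss functions. The sketch below computes those losses from discriminator outputs only; it does not train anything. It assumes the discriminator outputs a probability that a sample is real, and it uses the common non-saturating form of the generator loss, which is one choice among several.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: push D(fake) toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Made-up discriminator outputs for illustration.
confident = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
undecided = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
fooled = generator_loss(d_fake=[0.9])
caught = generator_loss(d_fake=[0.1])
print(confident, undecided, fooled, caught)
```

A discriminator that separates real from fake has a low loss, and the generator's loss falls as its samples are rated more realistic, so progress by one model raises the loss of the other.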

Radial Basis Function (RBF) Networks

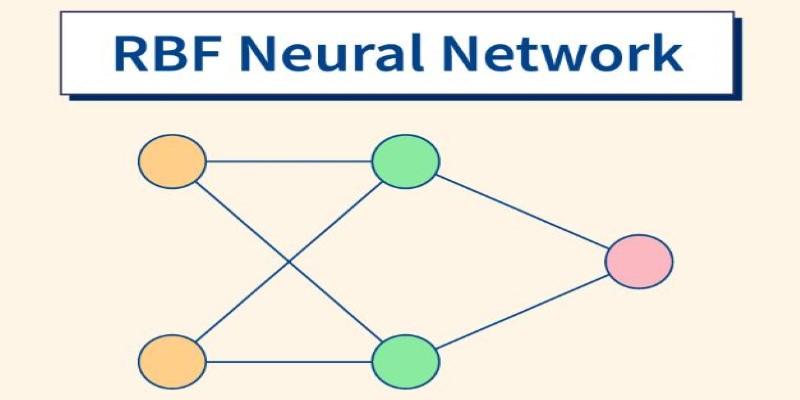

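RBF networks are built on radial basis functions, which measure the distance between an input and a set of reference centers. Rather than stacking many layers as other networks do, they typically use a single hidden layer whose units respond most strongly to inputs close to their center, so the network works by comparing similarity.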

This makes them effective for classification and regression tasks, especially when the dataset is relatively small. While they can generalize well, the number of centers, and with it the cost of training and prediction, tends to grow with the dataset, limiting their use in modern large-scale applications.
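The distance-based idea can be sketched with a Gaussian basis function, which is a common choice but an assumption here, as are the centers, the shared width, and the output weights.

```python
import math

def gaussian_rbf(x, center, width):
    """Activation is 1.0 at the center and decays with squared distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-squared_distance / (2.0 * width ** 2))

def rbf_predict(x, centers, width, weights, bias=0.0):
    """Weighted sum of each hidden unit's similarity to the input."""
    activations = [gaussian_rbf(x, c, width) for c in centers]
    return sum(w * a for w, a in zip(weights, activations)) + bias

# Two reference centers with opposite output weights (illustrative).
centers = [[0.0, 0.0], [10.0, 10.0]]
weights = [1.0, -1.0]

near_first = rbf_predict([0.0, 0.0], centers, width=1.0, weights=weights)
near_second = rbf_predict([10.0, 10.0], centers, width=1.0, weights=weights)
print(near_first, near_second)
```

Every prediction computes one distance per center, which is why the cost rises as more centers are added.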

Modular Neural Networks

Modular neural networks take a divide-and-conquer approach. Rather than one large network handling all data, multiple smaller networks (modules) process separate parts of a task. The outputs are then combined to form the final decision.

This setup reduces the complexity of each component, can help limit overfitting, and can increase accuracy. It’s particularly useful for financial forecasting, multi-sensor data processing, or other situations where diverse inputs need specialized handling. By assigning different responsibilities to different modules, these networks can scale more effectively.
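The divide-and-combine structure can be sketched as follows. The two modules are hypothetical stand-ins written as plain functions; in practice each would be a trained network, and the weighted-sum combiner is only one of several ways to merge their outputs.

```python
def price_module(prices):
    """Hypothetical stand-in for a sub-network that reads price history."""
    return prices[-1] - prices[0]

def sentiment_module(scores):
    """Hypothetical stand-in for a sub-network that reads sentiment scores."""
    return sum(scores) / len(scores)

def modular_predict(inputs, modules, weights):
    """Route each input group to its own module, then combine the outputs."""
    outputs = [module(inputs[name]) for name, module in modules]
    return sum(w * o for w, o in zip(weights, outputs))

# Made-up inputs and combination weights for illustration.
inputs = {"prices": [100.0, 101.0, 103.0], "sentiment": [0.2, 0.4]}
modules = [("prices", price_module), ("sentiment", sentiment_module)]
decision = modular_predict(inputs, modules, weights=[0.5, 2.0])
print(decision)
```

Because each module sees only its own slice of the input, a module can be retrained or replaced without touching the others.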

Conclusion

Neural networks are loosely modeled on how the human brain solves problems: processing information, identifying patterns, and adapting over time. Each type of neural network serves a different purpose. Feedforward networks handle simple classification, CNNs excel in visual recognition, RNNs and their variations manage sequences, GANs create new content, RBF networks compare similarity on smaller datasets, and modular designs break down complex problems. Together, these approaches show how flexible and adaptable the field has become. As research continues, neural networks will likely grow more capable and shape technology across communication, healthcare, education, and creative work.