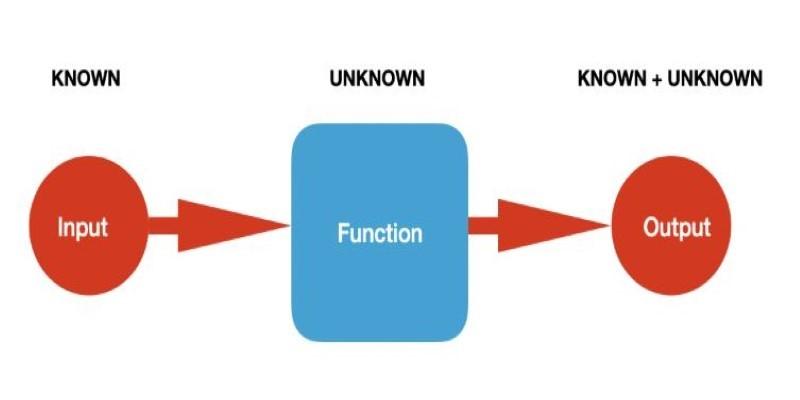

Computer vision projects move fast, and experiments multiply even faster. You tweak learning rates, toggle data augmentations, swap model backbones, and soon your folder is cluttered with runs named things like “try_new_aug_final2”. MLflow brings structure to this chaos: it logs the parameters you changed, the metrics you tracked, and the files you produced, then gathers them in one central place.

The Tracking component records parameters, metrics, tags, and artifacts, while the Model Registry keeps model versions with stages like Staging or Production. With a few steady habits, MLflow turns your training loop into a tidy, searchable record you can understand at a glance.

Set Up Tracking And The UI

Create a fresh environment so library versions stay stable. Install MLflow along with your usual deep learning stack. Point the tracking URI at a local store to begin, then keep the UI open during training so you can watch runs land. Many teams start with a simple SQLite backend and later migrate to a managed database and a storage bucket for artifacts without changing their training script.
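
As a minimal sketch of that starting point, the snippet below builds a SQLite tracking URI and selects an experiment. The function names, the `mlflow.db` file name, and the experiment name are illustrative assumptions, not part of MLflow.

```python
from pathlib import Path


def local_sqlite_uri(db_path: str = "mlflow.db") -> str:
    """Build a SQLite tracking URI for a local database file."""
    # as_posix() keeps forward slashes in the URI on every platform.
    return f"sqlite:///{Path(db_path).as_posix()}"


def configure_tracking(experiment_name: str, db_path: str = "mlflow.db") -> str:
    """Point MLflow at the local store and select an experiment."""
    import mlflow  # imported here so the URI helper works without MLflow

    uri = local_sqlite_uri(db_path)
    mlflow.set_tracking_uri(uri)
    # set_experiment creates the experiment if it does not exist yet.
    mlflow.set_experiment(experiment_name)
    return uri
```

Calling `configure_tracking("classification-demo")` at the top of a training script would send runs to the local database. Running `mlflow ui --backend-store-uri sqlite:///mlflow.db` in a second terminal should then serve the UI against the same store.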

Log Parameters That Describe Each Run

Open a run context and log the details that define the experiment. Record the dataset name and split, backbone, image size, optimizer, scheduler, batch size, and seed. Add tags for quick filtering, such as a tag for the CV task set to classification, detection, or segmentation. Save the git commit so the results link back to the code. Small, consistent parameter logs make comparisons painless and stop future you from guessing which settings produced a good curve.
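
One way to keep parameter logs consistent is to flatten the training config into dotted keys and log it in a single call. This is a sketch under assumptions: the helper names, the config layout, and the `cv_task` and `git_commit` tag keys are hypothetical choices.

```python
import subprocess


def flatten_config(config: dict, prefix: str = "") -> dict:
    """Flatten a nested config into dotted keys such as 'optimizer.lr'."""
    flat = {}
    for key, value in config.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten_config(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def current_git_commit() -> str:
    """Return the current commit hash, or 'unknown' outside a git checkout."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"],
            capture_output=True, text=True, check=True,
        )
        return result.stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        return "unknown"


def log_run_setup(config: dict, cv_task: str) -> None:
    """Log parameters and tags to the active run (call inside start_run)."""
    import mlflow  # imported here so the helpers above work without MLflow

    mlflow.log_params(flatten_config(config))
    mlflow.set_tags({"cv_task": cv_task, "git_commit": current_git_commit()})
```

Inside a `with mlflow.start_run():` block, `log_run_setup(config, cv_task="detection")` would record the whole setup before the first epoch starts.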

Record Metrics That Tell The Story

Metrics are the heartbeat of the run. For classification, track loss and accuracy per epoch, plus top-k accuracy if you need it. For detection, add mean average precision and class-level scores. For segmentation, log IoU and Dice. Keep an eye on the learning rate, too, since schedule issues often show up there first. Log at epoch boundaries for long runs to keep the UI readable. If you need per-step logging, throttle it so plots stay clear rather than noisy.
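
A small sketch of both habits, epoch-level logging and throttled step logging, might look like this. The metric names and the interval of 50 steps are assumptions chosen for illustration.

```python
def should_log_step(step: int, every_n_steps: int = 50) -> bool:
    """Return True only on every n-th step, to keep step-level plots sparse."""
    return step % every_n_steps == 0


def log_epoch_metrics(epoch: int, train_loss: float, val_loss: float,
                      val_accuracy: float, learning_rate: float) -> None:
    """Log one epoch of classification metrics to the active run."""
    import mlflow  # imported here so the throttle helper works without MLflow

    mlflow.log_metrics(
        {
            "train_loss": train_loss,
            "val_loss": val_loss,
            "val_accuracy": val_accuracy,
            "learning_rate": learning_rate,
        },
        step=epoch,  # the step becomes the x-axis in the UI charts
    )
```

In a training loop, a per-step call such as `mlflow.log_metric("train_loss_step", loss, step=global_step)` could be guarded with `if should_log_step(global_step):` so only a fraction of steps reach the tracking server.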

Store Artifacts That Make Results Visible

Artifacts complete the picture. Save the best model weights by validation score instead of only the final epoch. Keep a copy of the config file and the label map. Add a handful of prediction images with overlays such as boxes or masks, so you can see successes and misses without digging. Include a confusion matrix or PR curves and a short markdown summary that says what changed and why you tried it. Use thumbnails or compressed images so storage does not balloon.
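
To save the best weights rather than the last ones, the training loop needs to remember the best validation score so far. The tracker class, the file paths, and the artifact folder names below are hypothetical; the sketch only shows the shape of the idea.

```python
class BestCheckpointTracker:
    """Remember the best validation score seen so far."""

    def __init__(self, higher_is_better: bool = True):
        self.higher_is_better = higher_is_better
        self.best_score = None
        self.best_epoch = None

    def update(self, score: float, epoch: int) -> bool:
        """Record the score and return True if it is a new best."""
        if self.best_score is None:
            improved = True
        elif self.higher_is_better:
            improved = score > self.best_score
        else:
            improved = score < self.best_score
        if improved:
            self.best_score = score
            self.best_epoch = epoch
        return improved


def log_run_artifacts(weights_path: str, config_path: str,
                      label_map_path: str) -> None:
    """Upload the best weights, config, and label map to the active run."""
    import mlflow  # imported here so the tracker works without MLflow

    mlflow.log_artifact(weights_path, artifact_path="checkpoints")
    mlflow.log_artifact(config_path, artifact_path="config")
    mlflow.log_artifact(label_map_path, artifact_path="config")
```

A loop would call `tracker.update(val_score, epoch)` after each validation pass, overwrite the local best-weights file whenever it returns `True`, and call `log_run_artifacts` once at the end so only one copy of the weights is uploaded.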

Capture Environments For Reproducibility

Good results should be reproducible next week. Log a pip freeze or conda environment file, plus CUDA and driver versions in tags. Note the GPU type and memory. Record seeds and any deterministic flags used by your framework. If your pipeline applies on-the-fly augmentations, log the augmentation recipe as parameters so the data path can be repeated. These details feel routine, yet they turn one lucky run into a dependable recipe.
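
A rough sketch of environment capture using only the standard library is shown below. The tag names and the `environment/requirements.txt` artifact path are assumptions. CUDA, driver, and GPU details depend on your framework, so they are left as a comment.

```python
import platform
import subprocess
import sys


def environment_tags(seed: int) -> dict:
    """Collect basic environment facts as string tags."""
    # Framework-specific values (CUDA version, GPU name and memory, driver)
    # would be added here, for example torch.version.cuda in PyTorch.
    return {
        "python_version": platform.python_version(),
        "platform": platform.platform(),
        "seed": str(seed),
    }


def pip_freeze() -> str:
    """Return the output of 'pip freeze', or an empty string on failure."""
    try:
        result = subprocess.run(
            [sys.executable, "-m", "pip", "freeze"],
            capture_output=True, text=True, check=True,
        )
        return result.stdout
    except (OSError, subprocess.CalledProcessError):
        return ""


def log_environment(seed: int) -> None:
    """Attach environment tags and the package list to the active run."""
    import mlflow  # imported here so the helpers above work without MLflow

    mlflow.set_tags(environment_tags(seed))
    mlflow.log_text(pip_freeze(), "environment/requirements.txt")
```

Calling `log_environment(seed)` once at the start of each run keeps the package list next to the metrics it produced.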

Name Experiments And Runs Clearly

Pick experiment names that reflect the task and dataset. Use run names that describe the main idea you are testing, not a cryptic code. Add a one-line description, such as “switched to cosine schedule with warmup”. When the list grows long, that sentence saves real time because the intent is visible without opening artifacts.
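
A naming convention is easier to keep when a helper enforces it. The `task-dataset` pattern and both function names here are hypothetical; the `description` argument of `mlflow.start_run` is available in recent MLflow versions.

```python
def experiment_name(task: str, dataset: str) -> str:
    """Build an experiment name of the form 'task-dataset'."""
    return f"{task}-{dataset}".lower().replace(" ", "_")


def start_named_run(task: str, dataset: str, run_name: str, description: str):
    """Select the experiment and open a run with a readable name and note."""
    import mlflow  # imported here so the naming helper works without MLflow

    mlflow.set_experiment(experiment_name(task, dataset))
    # The returned object can be used as a context manager.
    return mlflow.start_run(run_name=run_name, description=description)
```

A call such as `start_named_run("detection", "COCO 2017", "cosine-warmup", "switched to cosine schedule with warmup")` would then show the intent directly in the run list.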

Compare Runs And Read What Images Reveal

The compare view shines when scores look close. Select candidates and plot validation curves side by side. If metrics tie, inspect artifacts. Browse class-level results for imbalance, and scan prediction images for missed small objects, over-smoothed masks, or odd false positives. Numbers tell you who wins, while images hint at why. Treat both as part of the same decision.
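
The same shortlist can be built in code before opening the UI. This sketch assumes a metric named `val_accuracy` and a tolerance of 0.005, both illustrative; `mlflow.search_runs` returns a pandas DataFrame by default.

```python
def close_contenders(scores: dict, tolerance: float = 0.005) -> list:
    """Return run IDs whose score is within `tolerance` of the best, best first."""
    if not scores:
        return []
    best = max(scores.values())
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [run_id for run_id, score in ranked if best - score <= tolerance]


def fetch_scores(experiment: str, metric: str = "val_accuracy") -> dict:
    """Map run IDs to their final value of `metric` for one experiment."""
    import mlflow  # imported here so the ranking helper works without MLflow

    runs = mlflow.search_runs(
        experiment_names=[experiment],
        order_by=[f"metrics.{metric} DESC"],
    )
    column = f"metrics.{metric}"
    if column not in runs.columns:
        return {}  # no run in this experiment logged the metric
    runs = runs.dropna(subset=[column])
    return dict(zip(runs["run_id"], runs[column]))
```

Runs returned by `close_contenders(fetch_scores("detection-coco_2017"))` are the ones worth opening side by side, since the scores alone will not separate them.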

Register Models And Manage Versions

When a model meets your bar, register it. MLflow assigns the version number; attach the metrics you trust and move the version to Staging. If you retrain with a small change, create a new version instead of overwriting files. The registry then reads like a timeline rather than a pile of folders. Promotion to Production becomes a calm step backed by the history already captured in MLflow.
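
Here is a sketch of that gate, assuming the model was logged under the artifact path `model` within the run. The threshold dictionary and function names are hypothetical. Recent MLflow releases mark registry stages as deprecated in favor of aliases, so the stage transition below matches the workflow described here and may need adapting on newer versions.

```python
def meets_bar(metrics: dict, minimums: dict) -> bool:
    """Return True if every required metric reaches its minimum value."""
    return all(
        metrics.get(name, float("-inf")) >= floor
        for name, floor in minimums.items()
    )


def register_if_good(run_id: str, model_name: str, metrics: dict,
                     minimums: dict, artifact_path: str = "model"):
    """Register the run's model and move it to Staging if it meets the bar."""
    import mlflow  # imported here so meets_bar works without MLflow
    from mlflow.tracking import MlflowClient

    if not meets_bar(metrics, minimums):
        return None
    version = mlflow.register_model(f"runs:/{run_id}/{artifact_path}", model_name)
    client = MlflowClient()
    # Attach the trusted metrics to the version as tags.
    for name, value in metrics.items():
        client.set_model_version_tag(
            name=model_name, version=version.version, key=name, value=str(value)
        )
    client.transition_model_version_stage(
        name=model_name, version=version.version, stage="Staging"
    )
    return version.version
```

Because each call to `mlflow.register_model` under the same name creates the next version, a retrained model lands beside the earlier one instead of replacing it.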

Avoid Frequent Pitfalls In Vision Tracking

Silent data drift breaks fair comparisons, so log a data manifest hash or a small sample of file paths. Over-logging can slow training, so prefer epoch-level metrics for long schedules and keep image artifacts light. Seed control helps with repeatability, but still plan a few independent runs when claims matter. Finally, log the base learning rate and batch size, even when a scheduler changes the learning rate during training, since those anchors explain much of the training behavior.
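
A data manifest hash can be as simple as a digest over relative file paths and sizes. This sketch makes that trade-off on purpose: it is cheap because it never reads file contents, so an edit that keeps the same path and size goes unnoticed. The function names and the tag key are assumptions.

```python
import hashlib
from pathlib import Path


def hash_manifest(entries) -> str:
    """Hash (relative_path, size_in_bytes) pairs, independent of input order."""
    digest = hashlib.sha256()
    for relative_path, size in sorted(entries):
        digest.update(f"{relative_path}\t{size}\n".encode("utf-8"))
    return digest.hexdigest()


def manifest_entries(data_dir: str) -> list:
    """List every file under data_dir with its relative path and size."""
    root = Path(data_dir)
    return [
        (path.relative_to(root).as_posix(), path.stat().st_size)
        for path in root.rglob("*")
        if path.is_file()
    ]


def log_data_manifest(data_dir: str) -> None:
    """Tag the active run with the dataset manifest hash."""
    import mlflow  # imported here so the hashing helpers work without MLflow

    mlflow.set_tag("data_manifest_sha256", hash_manifest(manifest_entries(data_dir)))
```

Two runs with different `data_manifest_sha256` tags were trained on different file sets, which is worth knowing before comparing their curves.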

Scale Up The Tracking Server And Storage

As experiments grow, move the backend store and artifacts off your laptop. Point MLflow at a managed database, place artifacts in a durable bucket, and set the tracking URI with an environment variable so scripts remain portable. Use experiment IDs in code to avoid name collisions. A modest bit of ops work here pays back with reliability and speed.
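
A portable script can read the tracking URI from the environment and fall back to a local store. MLflow itself reads `MLFLOW_TRACKING_URI` when no URI is set explicitly; the explicit helper below just makes the fallback visible. The function names and the default value are assumptions.

```python
import os


def resolve_tracking_uri(env=None, default: str = "sqlite:///mlflow.db") -> str:
    """Pick the tracking URI from the environment, else use a local default."""
    env = os.environ if env is None else env
    return env.get("MLFLOW_TRACKING_URI", default)


def start_run_by_id(experiment_id: str):
    """Open a run in a specific experiment, addressed by ID rather than name."""
    import mlflow  # imported here so the URI helper works without MLflow

    mlflow.set_tracking_uri(resolve_tracking_uri())
    return mlflow.start_run(experiment_id=experiment_id)
```

The same script then runs unchanged on a laptop and on a shared server; only the value of `MLFLOW_TRACKING_URI` differs between the two.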

Keep The House In Order

Archive dead experiments to reduce clutter. Hide noisy test runs. Prune artifacts that no one reads after a review cycle. A light lint check in your project can verify that key parameters such as lr and weight decay were logged on every run. If the UI starts to feel crowded, split major forks into separate experiments instead of tossing every run into one bucket. Clean structure today saves detective work tomorrow.
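
The lint check mentioned above can be a few lines. The list of required parameter names is a hypothetical project convention, and the sketch assumes parameters were logged under exactly those keys.

```python
REQUIRED_PARAMS = ("lr", "weight_decay", "batch_size", "seed")


def missing_params(logged: dict, required=REQUIRED_PARAMS) -> list:
    """Return the required parameter names that were not logged, sorted."""
    return sorted(set(required) - set(logged))


def check_run(run_id: str) -> list:
    """Look up a run and report which required parameters are missing."""
    from mlflow.tracking import MlflowClient  # imported lazily, as above

    run = MlflowClient().get_run(run_id)
    return missing_params(run.data.params)
```

An empty list from `check_run(run_id)` means the run passes; anything else names the parameters to add before the run is used in a comparison.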

Conclusion

Tracking computer vision experiments with MLflow is about habits, not heroics. Log parameters that describe the setup, record metrics that show progress, and store artifacts that make differences visible. Capture the environment so results repeat, name runs clearly so intent is obvious, and use comparisons to balance what the scores say with what the images show.

Register strong models and let versions tell the story of how they improved. Keep the system tidy as the project grows. With this rhythm in place, your experiment history reads like a clear narrative you can trust and explain.