Prototyping is one of the most important steps in building effective machine learning models, particularly when optimization strategies such as gradient descent are involved. It lets developers and data scientists experiment with, validate, and refine models quickly before committing to full-scale production. Gradient descent is one of the most widely used optimization algorithms and a fundamental building block of model training, so there is strong interest in how best to prototype it.

This article covers the fundamentals of prototyping gradient descent in machine learning, with details useful to both beginners and experts. It also describes how machine learning prototyping accelerates innovation and how to make the best use of fast prototyping methods.

The Concept Behind the Gradient Descent Algorithm

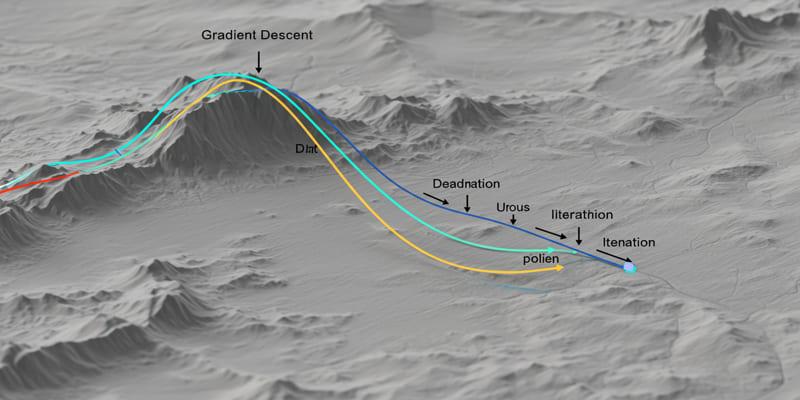

The gradient descent algorithm is an iterative optimization technique that reduces the cost function of a machine learning model by repeatedly updating the parameters in the direction of steepest descent, that is, along the negative gradient. Lowering the cost reduces prediction error, which generally improves accuracy.

The cost function quantifies the difference between predicted and actual values. The aim of gradient descent is to find the set of model parameters that minimizes this cost. This is essential to training linear regression models, neural networks, and most other models that learn by minimizing a loss.

Designing a gradient descent algorithm starts with a concise mathematical formulation that includes a learning rate (the step size with which the model adjusts its parameters) and convergence criteria (the conditions that stop the iteration). The variants of gradient descent, namely batch, stochastic, and mini-batch, offer different trade-offs between speed and precision.
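As a minimal sketch of the loop described above, the following pure-Python example fits a line `y = w*x + b` by batch gradient descent on a mean squared error cost. The function names, the toy data, and the default values for `learning_rate`, `tol`, and `max_iters` are illustrative assumptions rather than recommended settings.

```python
def mse_cost(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b on the data."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


def gradient_descent(xs, ys, learning_rate=0.05, tol=1e-10, max_iters=10_000):
    """Fit y = w*x + b by batch gradient descent.

    Stops when the cost changes by less than `tol` between iterations
    (the convergence criterion) or after `max_iters` steps.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    history = [mse_cost(w, b, xs, ys)]
    for _ in range(max_iters):
        # Gradients of the MSE cost with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mse_cost(w, b, xs, ys))
        if abs(history[-2] - history[-1]) < tol:
            break
    return w, b, history


# Toy data generated from y = 2x + 1 (no noise).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
w, b, history = gradient_descent(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, iterations={len(history) - 1}")
```

Because every update uses the full dataset, this is the batch variant. Stochastic and mini-batch variants differ only in how many examples feed each gradient estimate.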

Significance of Prototyping Machine Learning

Machine learning prototyping is the process of quickly developing, testing, and refining models or algorithms in order to try out ideas and find working solutions early. This iterative approach considerably reduces the risks of a full development cycle because errors and inefficiencies are detected at an early stage.

When used with gradient descent and other optimization algorithms, prototyping can allow practitioners to:

- Experiment with different batch sizes and learning rates.

- Test new data preprocessing and feature engineering hypotheses.

- Observe how quickly the parameters converge.

- Detect performance bottlenecks and opportunities for code optimization.

Machine learning prototyping creates rapid feedback loops and speeds up innovation and decision-making, particularly with complex data or models.

Fast Prototyping Machine Learning Techniques for Gradient Descent

To prototype the gradient descent algorithm successfully, it helps to use fast prototyping techniques that make the most of time and resources:

Use Lightweight Frameworks and Tools

Frameworks such as PyTorch and TensorFlow, along with higher-level wrappers such as PyTorch Lightning, offer flexible APIs for experimenting with gradient descent quickly. Their built-in automatic differentiation removes the need to derive and code gradients by hand, which speeds up both coding and debugging.

Start with Simplified Datasets

Smaller or synthetic datasets allow faster iterations and easier debugging during prototyping. They help verify that the algorithm is correct before it is applied to real-world data. This practice reduces the use of computational resources and speeds up experimentation.
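One common way to test correctness on a small synthetic dataset is a gradient check: compare the hand-written gradient with a finite-difference estimate of the same quantity. The sketch below assumes a linear model with a mean squared error cost. The dataset, the seed, and the function names are hypothetical choices for illustration.

```python
import random


def cost(w, b, xs, ys):
    """Mean squared error of y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def analytic_gradient(w, b, xs, ys):
    """Hand-derived gradient of the MSE cost with respect to (w, b)."""
    n = len(xs)
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
    grad_b = 2.0 / n * sum(errors)
    return grad_w, grad_b


def numeric_gradient(w, b, xs, ys, eps=1e-6):
    """Central finite-difference estimate of the same gradient."""
    grad_w = (cost(w + eps, b, xs, ys) - cost(w - eps, b, xs, ys)) / (2 * eps)
    grad_b = (cost(w, b + eps, xs, ys) - cost(w, b - eps, xs, ys)) / (2 * eps)
    return grad_w, grad_b


# Small synthetic dataset: y = 3x - 2 plus Gaussian noise (illustrative values).
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(20)]
ys = [3.0 * x - 2.0 + rng.gauss(0.0, 0.1) for x in xs]

analytic = analytic_gradient(0.5, 0.5, xs, ys)
numeric = numeric_gradient(0.5, 0.5, xs, ys)
max_diff = max(abs(a - n) for a, n in zip(analytic, numeric))
print(f"max difference between analytic and numeric gradient: {max_diff:.2e}")
```

A large difference usually points to a bug in the gradient code, which is much cheaper to find on twenty synthetic points than on a full dataset.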

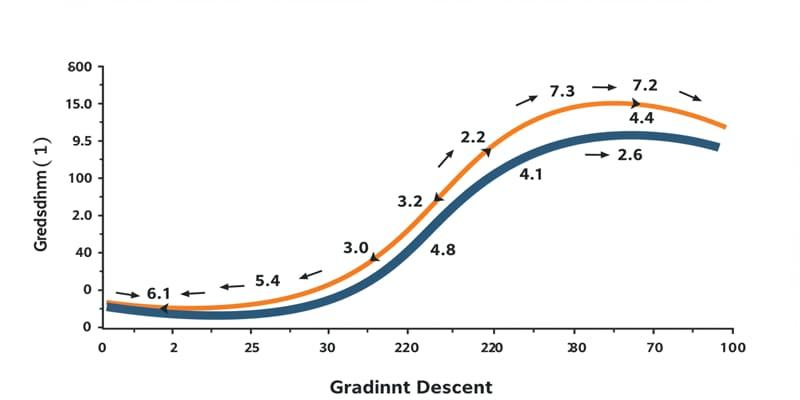

Visualize the Optimization Process

Visualizing the cost function and the parameter updates during gradient descent prototyping provides insight into learning dynamics and convergence patterns, which can then guide parameter adjustments. Training progress can be monitored in real time with tools such as Matplotlib or TensorBoard.
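The first step toward any such plot is recording the cost at every iteration. The sketch below does that for a small linear regression and prints a crude text chart as a dependency-free stand-in for a real plot. The `train` function and the toy data are assumptions made for illustration.

```python
def train(xs, ys, learning_rate, steps):
    """Run batch gradient descent on y = w*x + b and record the cost per step."""
    w, b = 0.0, 0.0
    n = len(xs)
    costs = []
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        costs.append(sum(e * e for e in errors) / n)
        w -= learning_rate * 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        b -= learning_rate * 2.0 / n * sum(errors)
    return costs


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]
costs = train(xs, ys, learning_rate=0.05, steps=60)

# Crude text chart: one bar per sampled step, scaled to the initial cost.
for step in range(0, len(costs), 10):
    bar = "#" * max(1, round(40 * costs[step] / costs[0]))
    print(f"step {step:3d}  cost {costs[step]:9.4f}  {bar}")
```

With Matplotlib installed, the same `costs` list can be passed to `matplotlib.pyplot.plot` to get a proper convergence curve.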

Automate Parameter Tuning

Automation methods such as grid search or Bayesian optimization can explore hyperparameters efficiently during the prototyping phase. Automated tuning reduces guesswork in finding good learning rates and batch sizes, and shortens the prototyping cycle considerably.

With these fast prototyping strategies, data scientists can refine gradient descent implementations and reach better model performance with less trial and error.
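A grid search over learning rate and batch size can be sketched in a few lines. The example below assumes a mini-batch gradient descent routine for a linear model and scores each configuration on a held-out validation set. The candidate values, the synthetic data, and all function names are illustrative assumptions.

```python
import itertools
import random


def make_data(n, rng):
    """Synthetic data from y = 2x + 1 with small Gaussian noise."""
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.05) for x in xs]
    return xs, ys


def mse(w, b, xs, ys):
    """Mean squared error of y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def minibatch_sgd(xs, ys, learning_rate, batch_size, epochs, rng):
    """Mini-batch gradient descent for y = w*x + b."""
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            errors = [w * xs[i] + b - ys[i] for i in batch]
            grad_w = 2.0 / len(batch) * sum(e * xs[i] for e, i in zip(errors, batch))
            grad_b = 2.0 / len(batch) * sum(errors)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


rng = random.Random(42)
train_x, train_y = make_data(80, rng)
val_x, val_y = make_data(40, rng)

results = []
for lr, batch_size in itertools.product([0.001, 0.01, 0.1], [8, 32]):
    # Fresh seeded generator per run so each configuration is reproducible.
    w, b = minibatch_sgd(train_x, train_y, lr, batch_size, epochs=50,
                         rng=random.Random(0))
    results.append((mse(w, b, val_x, val_y), lr, batch_size))

best_cost, best_lr, best_batch = min(results)
print(f"best: lr={best_lr}, batch_size={best_batch}, validation MSE={best_cost:.4f}")
```

Grid search is exhaustive, so its cost grows with the product of the candidate lists. Bayesian optimization instead uses earlier results to decide which configuration to try next.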

Challenges and Best Practices in Prototyping Gradient Descent

Although prototyping the gradient descent algorithm is very helpful, it comes with some difficulties:

- Learning Rate Selection: A learning rate that is too small leads to slow convergence, while one that is too large can overshoot the minimum or diverge. Careful experimentation is needed to balance speed and stability.

- Working with Large Datasets: Full-batch gradient descent can be very computationally intensive on large datasets. A common prototyping approach is to use stochastic or mini-batch variants to cut memory usage and speed up computation.

- Avoiding Local Minima: Gradient descent may get stuck in local minima. More sophisticated variants such as momentum, AdaGrad, or Adam can help the optimizer move past these traps.

- Overfitting and Underfitting: Early prototyping with validation datasets helps check that the model generalizes well. Cross-validation setups should be part of the prototype phase.
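The learning-rate trade-off from the first point above is easy to reproduce on a toy problem. The sketch below minimizes `f(x) = x**2` with three rates. The function, the starting point, and the specific rates are assumptions picked to make the three behaviors visible.

```python
def descend(learning_rate, start=5.0, steps=50):
    """Minimize f(x) = x**2 by gradient descent; the gradient is 2*x."""
    x = start
    for _ in range(steps):
        x -= learning_rate * 2.0 * x
    return x


# Illustrative rates: too small, reasonable, and too large for this function.
final = {lr: descend(lr) for lr in (0.01, 0.4, 1.1)}
for lr, x in final.items():
    print(f"learning_rate={lr:<4}  final x={x:.3e}")
```

Each step multiplies `x` by `1 - 2 * learning_rate`. A rate of 0.01 shrinks `x` slowly, 0.4 converges quickly, and 1.1 makes the magnitude grow on every step. The thresholds are specific to this function, but the pattern is the one a prototype should look for.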

Good practices in machine learning prototyping include documenting experiments, keeping code under version control, and maintaining reproducible workflows. Strong validation methods during prototyping help ensure that the optimized model generalizes well to unseen data.
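As one possible validation setup, the sketch below runs k-fold cross-validation around a small gradient descent fit and fixes the random seed so the run is reproducible. The fold assignment scheme, the synthetic data, and the names `fit`, `mse`, and `k_fold_mse` are assumptions made for illustration.

```python
import random


def fit(xs, ys, learning_rate=0.05, steps=1000):
    """Batch gradient descent for y = w*x + b."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= learning_rate * 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        b -= learning_rate * 2.0 / n * sum(errors)
    return w, b


def mse(w, b, xs, ys):
    """Mean squared error of y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def k_fold_mse(points, k=5):
    """Average validation MSE over k folds."""
    scores = []
    for fold in range(k):
        # Points whose index is congruent to `fold` modulo k form the validation fold.
        val = points[fold::k]
        train = [p for i, p in enumerate(points) if i % k != fold]
        train_x, train_y = zip(*train)
        val_x, val_y = zip(*val)
        w, b = fit(train_x, train_y)
        scores.append(mse(w, b, val_x, val_y))
    return sum(scores) / k


# Synthetic data from y = 2x + 1 with Gaussian noise; the seed fixes the run.
rng = random.Random(7)
points = []
for _ in range(100):
    x = rng.uniform(0.0, 4.0)
    points.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.5)))

cv_score = k_fold_mse(points)
print(f"5-fold cross-validated MSE: {cv_score:.3f}")
```

Recording the seed, the hyperparameters, and the resulting score together for each run is a simple way to keep experiments documented and repeatable.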

Advanced Practices in Prototyping Gradient Descent

As machine learning systems grow more complex, prototyping gradient descent calls for some further considerations:

- Integration with MLOps: Prototypes should be developed with eventual deployment in mind. Standardized code, modular design, and compatibility with MLOps platforms that support continuous integration and monitoring are essential to an efficient handoff to production teams.

- User-Centric Prototyping: Involve stakeholders and end users during the early prototyping stages so that model functionality matches business objectives. Real user feedback may reveal additional requirements and improve adoption.

- Ethics and Privacy: Prototype models have to comply with data privacy rules and include fairness checks to prevent bias. Checking at the prototype stage lets unintended consequences be detected early.

- Exploiting AI Tools: Automate parts of the prototyping workflow, such as feature selection and hyperparameter optimization, with AI-based tools. These tools can accelerate iterations and improve model quality.

With these considerations in place, organizations can make sure that their prototyping work produces not only functional models but also scalable and responsible machine learning solutions.

Conclusion

Prototyping gradient descent is an essential part of building effective and accurate machine learning models. By following the principles of machine learning prototyping and applying the gradient descent algorithm appropriately, practitioners can speed up development and make their models more robust. Fast prototyping methods support rapid iteration, experimentation, and validation, which ultimately leads to higher-quality machine learning solutions.