AI is now involved in everything from medical diagnostics to loan approvals. But one question keeps resurfacing: can we really understand why it makes certain decisions? Explainable AI (XAI) tries to answer that. It aims to make AI models more transparent so users and regulators can make sense of their outputs. But that’s easier said than done. While the need for XAI feels obvious, the road to making it work is full of limitations, compromises, and shifting definitions. So the question remains: Is Explainable AI built to last?

What Explainable AI Promises—and Where It Stalls

Explainable AI is meant to make machine learning decisions easier to understand. The goal is simple: help people see why a model made a certain choice. This matters most when the stakes are high, as in diagnosing illness, approving loans, or screening job applicants. Tools like LIME and SHAP estimate how much each input feature contributed to a given prediction, while attention maps show which parts of the input the model weighted most heavily.

But these tools often raise as many questions as they answer. They highlight influential factors, but that doesn’t always translate into clear reasoning. Worse, different explanation methods can disagree on what mattered most. That leaves people wondering which version—if any—they should trust.
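To make the disagreement concrete, here is a minimal sketch in plain Python. It does not use LIME or SHAP themselves; the toy `model`, the input values, and the two scoring functions are all assumptions chosen for illustration. One function scores a feature by what happens when it is removed (occlusion), the other by how sensitive the output is to a tiny change in it (a finite-difference gradient). Both are reasonable definitions of "what mattered," yet they can name different top features for the same prediction.

```python
# Toy model (hypothetical): one additive term plus one interaction term.
def model(x):
    return 3.0 * x[0] + x[1] * x[2]


def occlusion_attribution(f, x, baseline=0.0):
    """Score each feature by how much the output drops when it is replaced by a baseline."""
    full = f(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline
        scores.append(full - f(occluded))
    return scores


def gradient_attribution(f, x, eps=1e-6):
    """Score each feature by the local slope of the output (central finite difference)."""
    scores = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        scores.append((f(up) - f(down)) / (2 * eps))
    return scores


def top_feature(scores):
    """Index of the feature with the largest absolute score (first one wins ties)."""
    return max(range(len(scores)), key=lambda i: abs(scores[i]))


x = [1.0, 2.0, 2.0]  # illustrative input, not real data
occlusion = occlusion_attribution(model, x)
gradient = gradient_attribution(model, x)

print("occlusion:", occlusion, "-> top feature", top_feature(occlusion))
print("gradient: ", [round(g, 3) for g in gradient], "-> top feature", top_feature(gradient))
```

With this input, occlusion credits the two interacting features most, because removing either one wipes out their shared term, while the gradient view puts the first feature on top. Neither answer is wrong; they are answering slightly different questions.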

Instead of opening the black box, these tools sometimes just cut a small window into it. They offer partial summaries of the model's behavior, not full explanations. For a data scientist, that might be enough. But for someone trying to understand a denied claim or a flagged test result, it's not.

And explanations aren’t one-size-fits-all. What feels helpful to a developer might confuse a doctor or a policy analyst. That’s part of why XAI, while promising in theory, remains tough to deliver in practice.

The Tradeoff Between Accuracy and Interpretability

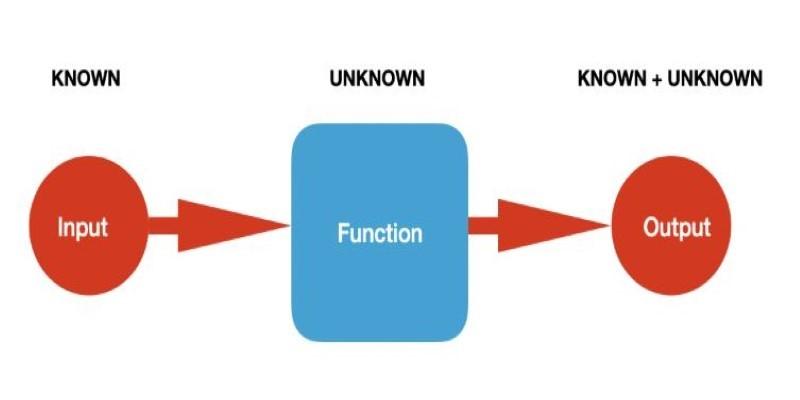

One of the main challenges of Explainable AI is the tradeoff between transparency and performance. Simpler models, such as decision trees, are easy to interpret, but they often struggle with complex data. In contrast, deep neural networks can detect patterns and make predictions with high accuracy—but they don't explain themselves well.

In practice, this leads to compromise. In safety-critical environments, using a high-performing black-box model without interpretability may not be acceptable. Yet using a simpler model for the sake of clarity might lead to missed diagnoses, flawed predictions, or unfair results.

Attempts to build models that are both interpretable and powerful are still in progress. Some research suggests that inherently interpretable models, such as sparse linear models or short rule lists, can come close to black-box accuracy on structured data, but results are often domain-specific. For many applications, trying to "bolt on" explanations after the fact can create confusion, especially when post hoc explanations don't reflect the actual logic of the model.
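One way to see how a bolted-on explanation can drift from the model's real logic is to measure its fidelity. The sketch below is a deliberately simple illustration, not any particular XAI library: `black_box` is a hypothetical stand-in for an opaque model, and the "explanation" is a straight line fitted to its outputs. The fitted slope is what a reader would be told about the feature's influence, and the R² score says how much of the model's behavior that story actually reproduces.

```python
def black_box(x):
    # Hypothetical stand-in for an opaque model: the output depends only on x.
    return x * x


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(xs, ys, slope, intercept):
    """Fidelity: share of the black box's output variance the surrogate reproduces."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


xs = [-1.0, -0.5, 0.0, 0.5, 1.0]  # illustrative probe points
ys = [black_box(x) for x in xs]
slope, intercept = fit_line(xs, ys)
fidelity = r_squared(xs, ys, slope, intercept)

print(f"surrogate slope={slope:.3f}, fidelity R^2={fidelity:.3f}")
```

Here the linear surrogate reports a slope of essentially zero, which reads as "this feature has no effect," even though the output depends on nothing else. The near-zero fidelity score is the warning sign. Checking fidelity before showing an explanation to anyone is a cheap guard against this kind of mismatch.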

This tradeoff limits where and how Explainable AI can be used effectively. If the explanation is too abstract, it won’t build trust. If it’s too detailed, it might confuse non-experts. And if it contradicts itself—or the actual model—it can do more harm than good.

Legal Pressure and Human Trust: The Social Weight of XAI

Explainable AI is not just a technical challenge. It's being driven by outside forces, especially policy. Regulators, particularly in the EU, are pressing for AI systems to provide reasons for their decisions, with the GDPR's provisions on automated decision-making as the most cited example. The idea that people should have a "right to explanation" is gaining ground, especially where automated decisions affect livelihoods.

This legal shift changes the incentives for companies. Transparency becomes not just a design choice, but a requirement. In this context, XAI tools are being used to help businesses meet compliance standards, not just improve product design or performance.

Beyond regulation, there’s the human side. People are more likely to accept and use AI when they understand how it works. If someone receives a medical prediction or a loan denial, they expect a clear reason. Trust is not built by accuracy alone—it’s shaped by communication. And AI that can’t explain itself may fail to earn that trust.

This applies to professional settings, too. A doctor might ignore a system that gives accurate results if the reasoning behind them isn't clear. In the same way, a legal expert or risk analyst is less likely to rely on AI if they can't audit its logic. In these fields, explainability isn't just nice to have—it's a necessary condition for adoption.

Explainable AI, when done well, supports collaboration between humans and machines. It helps people spot errors, challenge assumptions, and learn from the system. Without it, AI feels more like a verdict than a tool.

The Road Ahead: Will Explainable AI Survive?

The future of Explainable AI depends on how its role evolves. If we expect it to make every black-box model fully transparent, we may be disappointed. Complex models are difficult to interpret by nature, and many explanation methods only approximate a model's behavior rather than reading out its actual logic.

But XAI doesn’t need to do everything. It just needs to provide enough understanding to build confidence in how models work. That might mean designing models that are simpler but good enough for their tasks, or offering layered explanations that cater to different users—technical, legal, and everyday.
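As a rough sketch of what layered explanations could look like, the snippet below renders one set of feature attributions three ways. Everything in it is hypothetical: the feature names, the scores, the audience labels, and the wording of each message are made up for illustration and are not drawn from any real system or regulation.

```python
# Hypothetical attribution scores for one loan decision (illustrative values only).
ATTRIBUTIONS = {
    "debt_to_income": -0.42,
    "credit_history_years": 0.15,
    "recent_missed_payment": -0.31,
}


def explain(attributions, audience):
    """Render the same attributions at a level of detail suited to the audience."""
    # Rank features by the size of their effect, regardless of direction.
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

    if audience == "technical":
        # Full signed scores for someone debugging the model.
        return "; ".join(f"{name}={score:+.2f}" for name, score in ranked)

    if audience == "legal":
        # Only the factors that counted against the applicant, in order.
        adverse = [name for name, score in ranked if score < 0]
        return "Principal factors weighing against approval: " + ", ".join(adverse)

    if audience == "everyday":
        # A single plain-language sentence about the largest factor.
        name, score = ranked[0]
        direction = "lowered" if score < 0 else "raised"
        return f"The biggest factor was {name.replace('_', ' ')}, which {direction} your score."

    raise ValueError(f"unknown audience: {audience}")


for audience in ("technical", "legal", "everyday"):
    print(f"[{audience}] {explain(ATTRIBUTIONS, audience)}")
```

The underlying numbers never change, only how much of them each reader sees. That keeps the layers consistent with one another, which matters if a simplified message is ever checked against the detailed one.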

There’s growing interest in developing models that are transparent by design, not just through post-processing. These models won’t replace black-box systems entirely, but they could serve areas where interpretability matters more than raw performance.

Another promising path is improving how explanations are delivered. User-friendly formats—like text summaries or visual diagrams—can help bridge the gap between raw model logic and human reasoning. When people can test, question, and explore model outputs themselves, they tend to trust the system more—even if they don’t understand every detail.

Explainable AI is evolving. While it may not provide perfect clarity, it can offer enough insight to make AI more useful, safe, and trustworthy. That’s where its long-term value may lie.

Conclusion

Explainable AI responds to real demands for clarity, fairness, and accountability. As AI becomes more influential in health, finance, and public decisions, the need to understand its reasoning grows. Legal requirements and public expectations are pushing for more transparency. While explainability tools aren't perfect, they serve a growing purpose. As long as AI impacts people's lives, the need to make sense of its choices won't go away.