The human brain, with its 86 billion interconnected neurons, processes information and learns with astonishing complexity. Surprisingly, large language models (LLMs) now exhibit similar principles. Recent advances indicate that the way these models organize and process information has begun to resemble biological intelligence, only achieved through different means. Understanding this convergence provides crucial insights into the evolution of AI, offering a roadmap for more robust systems and a deeper understanding of intelligence itself.

Neural Networks Mirror Biological Architecture

Biological neurons communicate through electrical impulses and chemical signals, and they form complex networks that store and process information. When a neuron receives enough stimulation from its neighbors, it fires and sends signals downstream, which in turn affects other neurons. Repeated across billions of connections, this process produces the rich patterns of activity that we know as thought, memory, and consciousness.

LLMs use layers of artificial neurons loosely modeled on their biological counterparts. Every artificial neuron takes weighted inputs from the previous layer, applies a mathematical function to them, and forwards the result to the next layer. The underlying technology differs: one system runs on electrical and chemical signals, the other on mathematical operations. In both, however, intelligent behavior comes from networks of small, simple units, a principle called distributed processing.
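The artificial neuron described above can be sketched in a few lines of Python. This is only an illustration: the sigmoid activation, the weights, and the names `neuron` and `layer` are assumptions chosen for the example, not details of any particular LLM.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs, then a sigmoid activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Two inputs feed a hidden layer of two neurons, whose outputs feed one output neuron.
# All weights here are made-up illustrative values.
hidden = layer([0.5, -1.0], [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])
output = neuron(hidden, [1.5, -0.5], 0.0)
print(hidden, output)
```

Each unit does almost nothing on its own. Any interesting behavior comes from stacking many of them and tuning the weights.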

The transformer architecture that drives contemporary LLMs relies on attention mechanisms, which echo how biological systems allocate processing capacity to the information that matters most. The brain does something similar when it picks out one important voice in a noisy room while other people talk in the background. Attention mechanisms give an LLM the ability to prioritize the most relevant parts of the input it receives.
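To make the idea of prioritizing parts of the input concrete, here is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. The vectors are invented for illustration, and real transformers add learned projections, multiple heads, and batching that are left out here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Weight each value by how well its key matches the query."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# The query points in the same direction as the first key,
# so the first value should dominate the output.
weights, output = attention(
    query=[3.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights, output)
```

The weights play the role of focus: inputs whose keys match the query receive most of the weight, and the rest are largely ignored.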

Emergent Behaviors Arise From Simple Rules

One of the most notable parallels between biological systems and LLMs is that complex behavior arises from simple underlying rules. Nature shows this everywhere: by following three basic principles (separation, alignment, and cohesion), flocking birds produce intricate aerial patterns, and ant colonies build complex structures as individual ants follow simple chemical trails.
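The three flocking principles can be sketched as a single update step in a simplified simulation of the kind often called "boids". Everything specific here is an assumption made for illustration: the two-dimensional world, the rule weights `w_sep`, `w_ali`, `w_coh`, and the `sep_dist` threshold. Each bird also sees all the others rather than only its nearby neighbors.

```python
import math

def step(boids, sep_dist=1.0, w_sep=0.05, w_ali=0.05, w_coh=0.01):
    """Advance every boid one tick. Each boid is a tuple (x, y, vx, vy)."""
    updated = []
    for i, (x, y, vx, vy) in enumerate(boids):
        others = [b for j, b in enumerate(boids) if j != i]
        if others:
            n = len(others)
            # Cohesion: steer toward the average position of the others.
            cx = sum(b[0] for b in others) / n
            cy = sum(b[1] for b in others) / n
            # Alignment: steer toward the average velocity of the others.
            avx = sum(b[2] for b in others) / n
            avy = sum(b[3] for b in others) / n
            # Separation: steer away from boids that are too close.
            close = [b for b in others if math.hypot(x - b[0], y - b[1]) < sep_dist]
            sx = sum(x - b[0] for b in close)
            sy = sum(y - b[1] for b in close)
            vx += w_coh * (cx - x) + w_ali * (avx - vx) + w_sep * sx
            vy += w_coh * (cy - y) + w_ali * (avy - vy) + w_sep * sy
        updated.append((x + vx, y + vy, vx, vy))
    return updated

far = step([(0.0, 0.0, 0.0, 0.0), (10.0, 0.0, 0.0, 0.0)])   # distant boids drift together
near = step([(0.0, 0.0, 0.0, 0.0), (0.5, 0.0, 0.0, 0.0)])   # crowded boids move apart
print(far, near)
```

No boid has a plan for the shape of the flock. The pattern comes entirely from repeating these local rules.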

The same can be said of LLMs. Trained on the seemingly simple task of predicting the next word in a sequence, these models acquire behaviors that were never explicitly programmed. From language prediction alone they learn to perform arithmetic, write code, solve logical tasks, and even show a degree of creativity.
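The training objective itself, guessing the next word, is easy to show with a toy model. The sketch below counts which word follows which in a tiny made-up corpus. It is a deliberately crude stand-in: real LLMs learn this prediction with large neural networks rather than lookup tables, and a counter like this will not show any emergent abilities.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never followed."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat and the cat slept"  # illustrative text only
model = train_bigram(corpus)
print(predict_next(model, "the"))
print(predict_next(model, "sat"))
```

The objective is the same one an LLM optimizes. What differs is the scale and structure of the model doing the predicting.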

This observation suggests that intelligence can emerge from sufficient complexity and suitable structure, and that individual abilities may not need to be explicitly programmed. The implications reach beyond existing AI systems: they may offer insight into how biological intelligence evolved and into the next steps toward more capable artificial systems.

Hierarchical Processing Structures

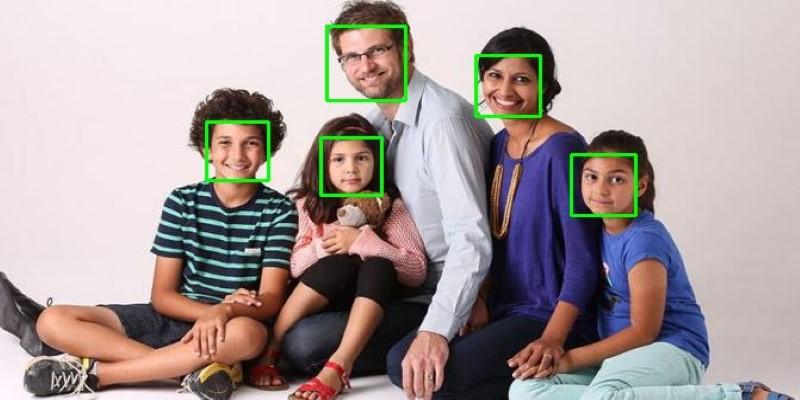

Both the brain and LLMs process information in hierarchies, building complex knowledge through multiple layers of abstraction. The visual cortex offers a good biological demonstration: the initial layers detect simple features such as edges and colours, intermediate layers detect more complex shapes and textures, and upper layers detect whole objects and scenes.

LLMs show a similar pattern. The lower transformer layers tend to focus on low-level language features, including word identity, grammar, and syntax. Middle layers form more elaborate representations that capture semantic dependencies and contextual meaning. The topmost layers combine this information to produce coherent responses and to display abstract reasoning.

This layered structure matters for both efficiency and capability. Each layer builds on the representations produced by the layers below it rather than reprocessing the raw input, so the system can handle tasks of increasing complexity while remaining computationally efficient.

Research using interpretability techniques suggests that LLMs spontaneously develop specialized processing pathways for different types of information, much like how various brain regions specialize in vision, language, or motor control. These pathways emerge naturally during training, suggesting that hierarchical specialization may be a fundamental principle of intelligent systems.

Memory Systems and Information Storage

Another interesting parallel lies in how biological systems and LLMs handle memory. Human memory relies on several interconnected systems. Working memory holds the information needed in the moment, while long-term memory stores knowledge and experience. Associative networks link related ideas across different domains.

LLMs implement comparable memory structures through their parameter weights and attention mechanisms. The model's parameters serve as long-term memory, encoding the patterns and knowledge learned from the training data. The attention mechanisms act as a working memory, keeping relevant context available while incoming data are processed. Associative links surface through self-attention, which lets the model draw on earlier information when generating a response.

Both systems face the same challenges: they must store large amounts of information efficiently, retrieve the relevant information on demand, and discard what is obsolete or irrelevant. The same solutions recur in both biological and artificial systems: selective attention, hierarchical organization, and distributed storage.

Learning and Adaptation Mechanisms

The learning mechanisms behind biological intelligence and LLMs also share characteristics. Biological neural networks strengthen the connections that prove useful and weaken those that do not. This property, known as synaptic plasticity, lets the brain adapt to new experiences and improve over time.

LLMs reach a similar result through a different mechanism: gradient descent with backpropagation. During training, the model adjusts its connection weights whenever its predictions are wrong, strengthening the connections that improve performance and weakening those that do not. This loosely resembles the biological principle that neurons that fire together wire together.
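The weight-adjustment idea can be shown with gradient descent on a single weight. This is a minimal sketch under stated assumptions: a one-parameter linear model, a squared-error loss, and a made-up learning rate and data set. Backpropagation, which carries the same gradient calculation through many layers, is not shown.

```python
def train(samples, lr=0.05, epochs=200):
    """Fit y = w * x by nudging w against the gradient of the squared error."""
    w = 0.0
    for _ in range(epochs):
        for x, y in samples:
            prediction = w * x
            error = prediction - y
            gradient = 2 * error * x  # derivative of (w*x - y)**2 with respect to w
            w -= lr * gradient        # step in the direction that reduces the error
    return w

# Illustrative data generated by the rule y = 2 * x.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w = train(samples)
print(w)
```

Each update is tiny and local, yet repeated over many examples the weight settles on the value that best explains the data.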

Both systems also exhibit transfer learning, that is, applying knowledge acquired in one area to new contexts. Biological intelligence is good at this: a person who learns to throw a ball finds other hand-eye coordination tasks easier, and knowing one language helps in understanding others. The same holds for LLMs, which transfer grammatical knowledge across languages and reasoning patterns across problem domains.

Future Implications and Applications

Understanding these biological parallels opens exciting possibilities for future AI development. By studying how biological systems achieve efficiency, robustness, and adaptability, researchers can design better artificial systems that inherit these beneficial properties.

Neuroplasticity research suggests ways to make LLMs more adaptable, allowing them to continue learning and updating their capabilities after initial training. Studies of biological attention mechanisms inspire more efficient architectures that can process information with less computational overhead. Research into how the brain handles uncertainty and ambiguity provides frameworks for building more robust AI systems that can operate reliably in unpredictable environments.

The convergence also raises important questions about consciousness, creativity, and the nature of intelligence itself. As LLMs develop increasingly sophisticated emergent capabilities, they provide unique tools for testing theories about how complex behaviors arise from simple components.

Final Thoughts

Intelligence appears to follow general principles, whether it arises in biology or in AI systems such as LLMs. Both rest on hierarchical processing, distributed memory, attention mechanisms, and emergent abilities that let them tackle complex problems. Studying LLMs helps illuminate aspects of biological thinking, and biology in turn informs the development of AI. This convergence promises nature-inspired AI systems and technologies that can benefit biological research, advancing both science and its practical applications.