The machine learning and AI landscape in 2025 is more practical, modular, and accessible than it was a few years ago. The focus has shifted from simply building models to managing them efficiently, from training infrastructure through to deployment. Whether you're handling text, vision, or multi-modal data, the choice of tool now shapes the speed and scale of your outcomes. Work that once needed a massive setup can now be done with open-source libraries, low-code interfaces, or hosted APIs. This guide covers 18 tools that stand out in machine learning, NLP, and deep learning this year.

Top 18 Machine Learning, NLP, and Deep Learning Tools for 2025

TensorFlow

Backed by Google, TensorFlow stays central to production workflows. TensorFlow Lite and TensorFlow Extended (TFX) have become easier to use, making deployment to mobile and cloud environments more efficient. Support for GPUs and TPUs remains strong. Developers benefit from structured APIs, streamlined data validation, and monitoring tools that now come bundled.

PyTorch

Known for flexibility, PyTorch continues to be favored in research and applied ML. The 2.x releases boost speed through torch.compile, which captures models as graphs for optimization, and through improved distributed training support. TorchServe simplifies model serving and PyTorch Lightning simplifies training, making PyTorch a good choice for both prototyping and scaling.

Hugging Face Transformers

This library powers many NLP and multi-modal applications. It now covers audio, vision, and reinforcement learning. Hugging Face Hub and Inference Endpoints offer fast deployment options. Integration with PyTorch and TensorFlow helps teams switch between frameworks without rewriting code.

Scikit-learn

Scikit-learn remains reliable for structured data and classic ML models. It’s now easier to integrate with GPU-accelerated libraries and pipeline tools. It's widely used in small to medium-sized projects where deep learning isn’t necessary. The learning curve is still one of the lowest among ML libraries.
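
As a minimal sketch of the classic workflow described above, the following fits a scaling-plus-logistic-regression pipeline on a small synthetic dataset. The dataset, the choice of estimator, and the variable names are illustrative assumptions, not something this guide prescribes.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic tabular data stands in for a real structured dataset (assumption).
X, y = make_classification(n_samples=300, n_features=8, n_informative=4, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Chain preprocessing and the model so both are fit and applied together.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

# Mean accuracy on the held-out split.
accuracy = model.score(X_test, y_test)
print(f"held-out accuracy: {accuracy:.2f}")
```

Wrapping the scaler and the classifier in one pipeline means the scaling statistics are learned only from the training split, which avoids leaking test data into preprocessing.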

OpenAI API

The API provides quick access to GPT-4o and other large models. It handles tasks like summarization, reasoning, classification, and image generation. New features like custom instructions and improved function calling offer more control without needing to train your own models. It’s a good fit for rapid development.

spaCy

spaCy is a compact tool for NLP tasks such as parsing, NER, and sentence segmentation. Since version 3, it supports transformer-based pipelines alongside pretrained models for many languages. It’s ideal for projects that need fast processing, clean APIs, and a smaller footprint than full-scale LLMs.

Keras

Keras ships with TensorFlow as tf.keras and, since Keras 3, can also run on JAX and PyTorch backends. It offers a clean syntax and supports advanced features like mixed precision and distributed training. It suits both beginners and professionals building production-ready models. Its plug-and-play nature makes it effective for quick model development.

LangChain

LangChain has become a go-to for building LLM-powered applications. It connects language models with memory, tools, and data sources. In 2025, it supports async execution, modular chains, and tight integration with vector databases—key for RAG pipelines and chat-based tools.
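
To make the retrieval step of a RAG pipeline concrete, here is a minimal, library-free sketch. It does not use LangChain's API: the toy `embed` function, the in-memory `documents` list, and the `retrieve` and `build_prompt` helpers are all hypothetical stand-ins for an embedding model, a vector database, and a prompt template.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (assumption: stands in for a real embedding model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# In-memory list standing in for a vector database (assumption).
documents = [
    "spaCy handles parsing and named entity recognition",
    "MLflow tracks experiments and logs metrics",
    "ONNX exports models across frameworks",
]
index = [(doc, embed(doc)) for doc in documents]

def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(query: str) -> str:
    """Prepend retrieved context to the question before it is sent to an LLM."""
    context = "\n".join(retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"

print(build_prompt("which tool tracks experiments"))
```

In a real application, a framework such as LangChain would replace each of these pieces with a configurable component, but the flow stays the same: embed the query, rank stored documents by similarity, and pass the best matches to the model as context.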

FastAI

FastAI is known for making deep learning more accessible. It provides high-level APIs for text, image, tabular, and time-series data. The 2025 update supports transformers and vision models out of the box. It's great for teams who want fast iteration without giving up performance.

AllenNLP

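AllenNLP was built for interpretability and clear experimentation. It includes out-of-the-box support for transformers, attention mechanisms, and benchmarking datasets, and researchers have used it to prototype quickly with better visibility into what’s happening inside their models. Note that the Allen Institute for AI moved the project to maintenance mode in 2022, so check its current status before starting new work on it.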

NVIDIA NeMo

NeMo is designed for building speech and language models at scale. It offers optimized training on NVIDIA GPUs and now supports real-time transcription, synthesis, and classification. Its integration with Triton Inference Server makes it strong in low-latency environments.

ONNX

ONNX makes it easy to export models across frameworks. It's widely used to deploy models trained in PyTorch or TensorFlow to edge or production environments. The 2025 runtime supports quantized models, faster inference, and lower memory usage—key for mobile and embedded devices.
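
The memory savings from quantization come from storing weights as 8-bit integers plus a scale factor instead of 32-bit floats. The following is a conceptual sketch in plain Python, not ONNX Runtime's implementation; the symmetric per-tensor scheme and the function names are assumptions chosen for illustration.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the integers and the scale."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.03, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each weight would need 1 byte instead of 4, at the cost of a small rounding error.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The rounding error per weight is bounded by half the scale, which is why quantization tends to work well when the weights in a tensor span a similar range.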

JAX

JAX is gaining popularity for mathematical optimization, physics simulations, and ML research. Its combination of NumPy-style code and automatic differentiation is powerful. With better documentation and easier setup, it's more approachable than before, especially for researchers working on custom models.
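
The combination of NumPy-style code and automatic differentiation can be sketched in a few lines. This is an illustrative example under stated assumptions: the toy data, the `loss` function, the learning rate, and the step count are made up for the demonstration.

```python
import jax
import jax.numpy as jnp

def loss(w, x, y):
    """Mean squared error of a linear model, written in NumPy style."""
    pred = x @ w
    return jnp.mean((pred - y) ** 2)

# Toy data (assumption): y = 2 * x0 - 1 * x1 exactly.
x = jnp.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
y = jnp.array([2.0, -1.0, 1.0])

# jax.grad differentiates with respect to the first argument; jax.jit compiles the result.
grad_fn = jax.jit(jax.grad(loss))

w = jnp.zeros(2)
for _ in range(300):
    w = w - 0.1 * grad_fn(w, x, y)  # plain gradient descent step

print(w)
```

Because `loss` is an ordinary Python function, the same code can be transformed further, for example with `jax.vmap` for batching, without rewriting the model.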

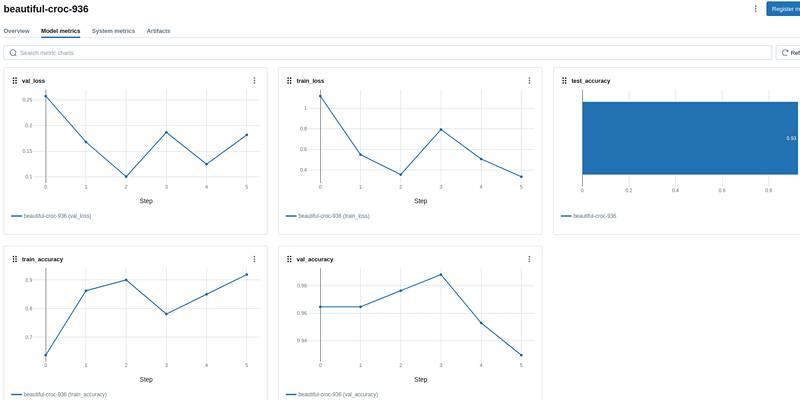

MLflow

MLflow helps track experiments, log metrics, and manage model lifecycles. It works with most major ML libraries and cloud environments. New updates bring better UI, improved access controls, and smoother integration with deployment platforms like SageMaker and Azure ML.

Weights & Biases

W&B is used to visualize experiments, compare results, and monitor performance. In 2025, it supports real-time collaboration, dataset versioning, and reports for sharing findings. It integrates with Kubernetes and cloud storage, making it scalable for team environments.

T5X

T5X is Google’s framework for training large language models. It enables faster experimentation with text-to-text transformers, especially in multi-task and multilingual settings. While more niche, it's effective for building foundation models in research-heavy environments.

Cohere

Cohere provides hosted APIs for embeddings, text classification, and generation. Their models now support retrieval-augmented generation and lightweight fine-tuning. It appeals to teams wanting reliable NLP tools with more transparency than closed platforms.

Google Vertex AI

Vertex AI brings together AutoML, pipelines, and managed notebooks under one platform. In 2025, it integrates more closely with other Google Cloud services, allowing smooth data ingestion, model training, and deployment. It's built for teams with heavy workloads or multi-step workflows.

Final Thoughts

In 2025, tools for machine learning and NLP are more diverse, better connected, and easier to use. Whether you need lightweight libraries for quick experimentation or full-scale platforms for deployment, you don’t need to choose just one, because many of these tools complement each other. As workflows become more modular and data more dynamic, the right combination of tools can help you move from prototype to production without getting stuck in engineering overhead, whether the setting is research or an enterprise-scale application.