Kolmogorov-Arnold Networks (KANs) sit at the intersection of classical mathematics and neural networks. They approximate multivariate functions by composing and summing simpler univariate functions. This guide discusses the mathematical principles behind KANs, their importance and historical background, and their possible role in the future of machine learning.

The Foundation: Kolmogorov-Arnold Representation Theorem

The Kolmogorov-Arnold Representation Theorem, also known as the superposition theorem, is a fundamental result in real analysis and approximation theory. It was established by mathematicians Andrey Kolmogorov and Vladimir Arnold in the late 1950s.

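This theorem states that any continuous function of several variables on a bounded domain can be represented exactly using only addition and continuous univariate functions. In other words, given a continuous multivariate function $f(x_1, x_2, \dots, x_n)$, there exist continuous univariate outer functions $\psi_q$ and inner functions $\phi_{q,p}$ such that:

$$f(x_1, x_2, \dots, x_n) = \sum_{q=0}^{2n} \psi_q\left(\sum_{p=1}^{n} \phi_{q,p}(x_p)\right)$$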

This not only provides a useful tool for approximating complex functions, but also has important implications in fields such as signal processing, data analysis, and machine learning.
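As a small illustration of the idea, the product of two variables can be written using only sums and one univariate map, since x * y = ((x + y)^2 - (x - y)^2) / 4. The sketch below checks this numerically. The function names are hypothetical, and the identity is a hand-picked example of the "univariate functions plus addition" form, not the construction used in the theorem's proof.

```python
import numpy as np

def square(t):
    """The only nonlinear ingredient: a function of one variable."""
    return t ** 2

def product_via_univariate(x, y):
    """Compute x * y using sums of inputs and the univariate map t -> t**2."""
    # Two branches: each adds (or subtracts) the inputs, then applies
    # a univariate function to the result.
    return 0.25 * square(x + y) - 0.25 * square(x - y)

rng = np.random.default_rng(0)
x = rng.uniform(-3.0, 3.0, size=1000)
y = rng.uniform(-3.0, 3.0, size=1000)

# Largest deviation from the direct product; only rounding error remains.
max_err = np.max(np.abs(product_via_univariate(x, y) - x * y))
print(max_err)
```

Here two branches are enough; the theorem allows up to 2n + 1 branches for a function of n variables.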

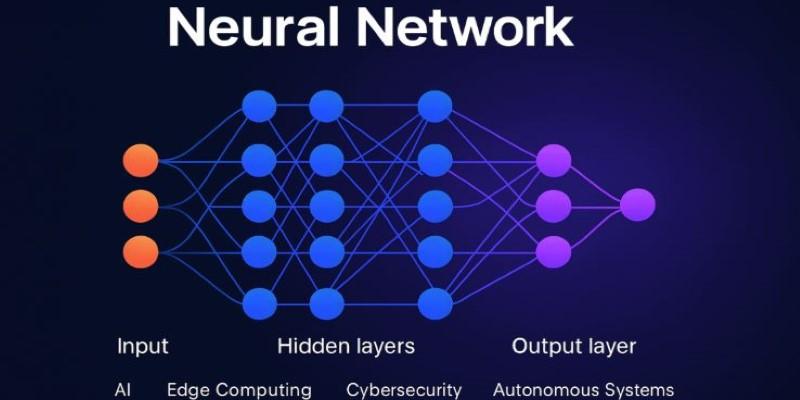

Translating Theory into Neural Network Architecture

Kolmogorov-Arnold Networks turn the theorem into a working neural network model. In traditional networks, multivariate interactions are buried in the hidden layers and are hard to interpret; KANs instead express their computations explicitly as univariate transformations. The essential architectural characteristics are:

- Input Layer Transformation: Each input variable is processed by its own univariate transformation $\phi_i$.

- Hidden Layer Combination: The transformed inputs are summed and passed through further univariate functions $\psi_j$ in the hidden layer, mirroring the inner summation in the theorem.

- Output Generation: The output is the sum of the network's transformed components. The theorem guarantees that a representation of this form exists for any continuous function, although it does not guarantee that training will find it.

This architecture gives the network a clear mathematical foundation, in contrast to more heuristic neural network design methods.
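The three steps above can be sketched as a forward pass. This is a minimal sketch under stated assumptions: `kan_forward`, `identity`, and `negate` are hypothetical names, and the univariate functions are fixed by hand rather than learned, chosen so that the network reproduces f(x1, x2) = x1 * x2.

```python
import numpy as np

def kan_forward(x, inner, outer):
    """Evaluate sum_q outer[q]( sum_p inner[q][p](x[:, p]) ).

    x has shape (batch, n); inner[q][p] and outer[q] are univariate callables.
    """
    total = np.zeros(x.shape[0])
    for q, outer_fn in enumerate(outer):
        # Input layer: transform each coordinate on its own, then add them up.
        combined = sum(inner[q][p](x[:, p]) for p in range(x.shape[1]))
        # Hidden layer: a single univariate function of the combined value.
        total += outer_fn(combined)
    # Output: plain sum of the hidden-layer branches.
    return total

def identity(t):
    return t

def negate(t):
    return -t

# Hand-picked (not learned) functions that reproduce x1 * x2.
inner = [[identity, identity], [identity, negate]]
outer = [lambda t: 0.25 * t ** 2, lambda t: -0.25 * t ** 2]

x = np.array([[1.0, 2.0], [3.0, -4.0], [0.5, 0.5]])
out = kan_forward(x, inner, outer)
print(out)  # each entry equals x1 * x2 for the corresponding row
```

In a trained KAN the entries of `inner` and `outer` would be learnable curves rather than fixed formulas; the control flow stays the same.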

Mathematical Insights Behind KANs

The Kolmogorov-Arnold theorem gives rise to several mathematical principles that underpin the strengths of KANs:

- Dimensional Reduction Decomposition: Functions of high dimensions are expressed as compositions of one-dimensional transformations to reduce computational complexity.

- Assured Existence of Univariate Mappings: Every input dimension is handled by univariate functions, so the network can concentrate on learning simpler, more interpretable functions instead of trying to model multivariate interactions directly.

- Formation of Structured Output: When these transformations are combined additively, the network can in principle approximate any continuous target to arbitrary accuracy.

This explicit mathematical structure makes it easier to see how each input contributes to the output, which improves interpretability and reliability.

Advantages of Kolmogorov-Arnold Networks

KANs have several substantial benefits compared to standard neural network designs:

- Theoretical Guarantees: Unlike purely heuristic designs, KANs build on a proven representation result for continuous functions, which is valuable when model behavior must be justified to multiple stakeholders.

- Interpretability: The association between neurons and univariate transformations enables the practitioner to trace the impact of inputs on outputs.

- Computational Efficiency: Because multivariate complexity is reduced to univariate components, KANs can be trained more efficiently, especially when the input variables exhibit separable patterns.

- Overfitting Resistance: The structured decomposition imposes structural constraints that help guard against the network picking up spurious patterns in the data.

These benefits make KANs particularly well suited to areas where accuracy, transparency, and reliability matter most.

Practical Applications of KANs

Kolmogorov-Arnold Networks are highly versatile and can be applied across multiple domains:

- Finance and Economics: KANs excel in modeling complex financial systems, risk assessment, and portfolio optimization, capturing nonlinear relationships among diverse economic indicators.

- Engineering Simulations: From aerodynamics to structural mechanics, KANs approximate complex system behaviors, allowing accurate predictions with fewer computational resources.

- Healthcare Analytics: Multivariate patient data can be analyzed to generate diagnostic predictions while retaining the interpretability of input contributions.

- Environmental Modeling: Climate simulations and ecological predictions benefit from KANs' ability to model high-dimensional phenomena with theoretically grounded approximations.

- Scientific Research: Experimental data analysis often involves multivariate functional relationships, making KANs an ideal tool for accurate modeling and hypothesis testing.

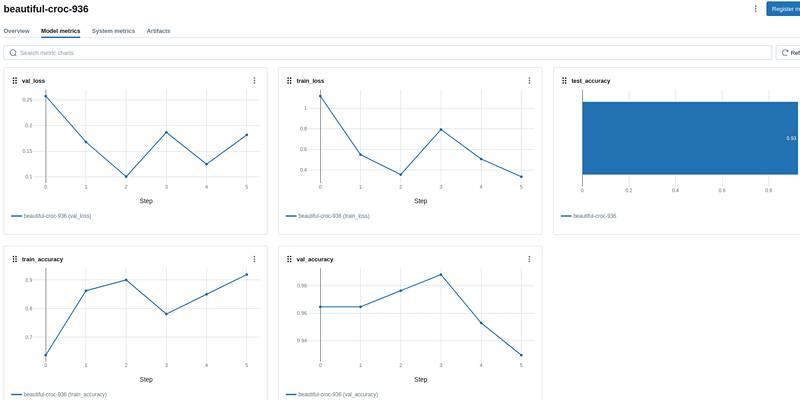

Training Kolmogorov-Arnold Networks

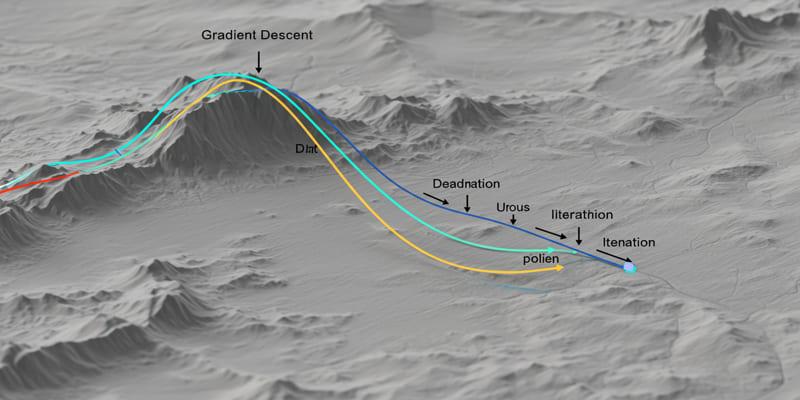

Training KANs requires optimizing both the univariate transformation functions and their linear combinations:

- Parameter Initialization: Functions $\phi_i$ and $\psi_j$ are initialized using standard methods or based on prior domain knowledge.

- Optimization Algorithms: Gradient descent and other optimization strategies are applied, ensuring continuity and smoothness constraints inherent to the theorem are preserved.

- Regularization and Constraints: Smoothness penalties or constraints may be added to ensure theoretical alignment with the Kolmogorov-Arnold framework.

- Validation and Analysis: Interpretable transformations allow direct inspection of network behavior, facilitating both model validation and explanation.

Unlike generic neural networks, KANs leverage mathematical guarantees to guide training, potentially leading to faster convergence and more stable learning.
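The training steps above can be sketched on a deliberately simplified case: an additive model f(x) = g1(x1) + g2(x2), which corresponds to a single branch with an identity outer function. Everything here is an assumption made for illustration: the names `hat_basis` and `fit_additive`, the piecewise-linear parameterization of each curve, the second-difference smoothness penalty, and the values of `lam`, `lr`, and `steps`.

```python
import numpy as np

def hat_basis(x, knots):
    """Piecewise-linear (hat) basis: column k peaks at knots[k]."""
    h = knots[1] - knots[0]
    return np.maximum(0.0, 1.0 - np.abs(x[:, None] - knots[None, :]) / h)

def fit_additive(x, y, knots, lam=1e-4, lr=1.0, steps=3000):
    """Gradient descent on MSE plus a smoothness penalty on each curve."""
    n, d = x.shape
    k = len(knots)
    basis = [hat_basis(x[:, p], knots) for p in range(d)]
    coef = np.zeros((d, k))  # initialization: every curve starts flat
    # Second differences of the knot values measure curvature.
    second_diff = np.diff(np.eye(k), n=2, axis=0)
    penalty = second_diff.T @ second_diff
    for _ in range(steps):
        pred = sum(basis[p] @ coef[p] for p in range(d))
        resid = pred - y
        for p in range(d):
            grad = 2.0 / n * basis[p].T @ resid + 2.0 * lam * penalty @ coef[p]
            coef[p] -= lr * grad
    return coef

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(1000, 2))
y = np.sin(np.pi * x[:, 0]) + x[:, 1] ** 2  # additive toy target

knots = np.linspace(-1.0, 1.0, 11)
coef = fit_additive(x, y, knots)

# Validation: overall fit quality on the training data.
pred = sum(hat_basis(x[:, p], knots) @ coef[p] for p in range(2))
mse = np.mean((pred - y) ** 2)

# Analysis: the learned curve for x2, shifted to zero at x2 = 0,
# can be compared directly with the true component x2 ** 2.
g2 = coef[1] - coef[1][5]
print(mse, np.max(np.abs(g2 - knots ** 2)))
```

Because each row of `coef` is a curve over one input, it can be plotted or compared with a known component directly, which is the kind of inspection the validation step refers to. A full KAN would also learn the outer functions and use several branches.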

KANs in Context: Comparison with Other Neural Networks

- Feedforward Neural Networks: Flexible and backed by universal approximation results, but their learned weights offer little interpretability.

- Radial Basis Function Networks: Focus on local approximation, whereas KANs focus on global univariate decomposition.

- Convolutional Networks: Designed for structured data such as images, with no explicit multivariate decomposition.

- Recurrent Neural Networks: Well suited to time series, but they do not provide an approximation framework for general multivariate functions the way KANs do.

KANs occupy a special place in the field of neural networks, offering both explanatory power and strong approximation capability.

Future Directions

Kolmogorov-Arnold Networks are promising both as a research topic and as a practical tool. Possible directions include:

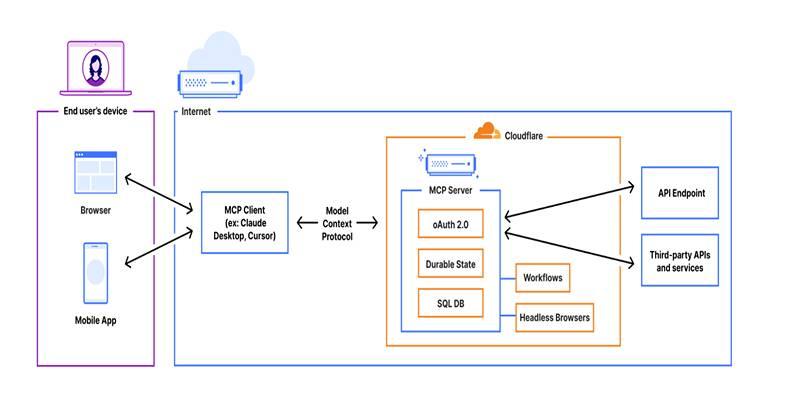

- Hybrid Architectures: KANs could be combined with probabilistic models or existing deep learning frameworks.

- Dimensionality Reduction Techniques: To improve scalability, KANs can be paired with preprocessing techniques when the input is extremely high-dimensional.

- Hardware Acceleration: KANs can be optimized to run on current computational architectures, enabling faster training on large datasets.

- Generalisation to Discrete Functions: Further research could extend KAN principles to non-continuous functions.

The continued development of KANs suggests they will remain relevant in both theoretical and practical computation.

Conclusion

Kolmogorov-Arnold Networks bring classical mathematics together with modern neural network design. Built on the Kolmogorov-Arnold representation theorem, KANs provide a principled framework for approximating multivariate functions. Their structured design, use of univariate transformations, interpretability, and range of possible applications make them an attractive alternative to traditional networks in many domains.