Training a language model from scratch means building every key part yourself. You collect a clean corpus, turn text into tokens, define an architecture, run a stable training loop, measure quality, and ship a package that others can run. Rust suits this journey because it gives tight control of memory, strong safety checks, and predictable performance.

You can squeeze more from each node, trace bottlenecks with confidence, and avoid surprises in production. We will keep the focus on process, not novelty. The target is a model that learns steadily, is reproducible, and is straightforward to operate.

Data Foundations That Keep Training Stable

Good training starts with text that is consistent, licensed, and well-formed. Build a pipeline that fetches sources with clear rights, strips boilerplate, normalizes whitespace, and standardizes Unicode.
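As a rough illustration of the normalization step, here is a minimal Rust sketch. It assumes each document arrives as a `&str`. It unifies line endings, drops control characters, and collapses runs of spaces and tabs within each line, but it keeps newlines because newline handling matters later. `normalize_text` is a hypothetical helper. Unicode normalization (such as NFC) is not shown and would normally come from an external crate.

```rust
/// Normalizes one raw document: unifies line endings, drops control
/// characters other than whitespace, collapses runs of whitespace within
/// each line to a single space, and trims the result. Unicode normalization
/// (for example NFC) is deliberately left out of this sketch.
fn normalize_text(input: &str) -> String {
    // Turn "\r\n" and lone "\r" into "\n" so every source uses one line ending.
    let unified = input.replace("\r\n", "\n").replace('\r', "\n");
    let joined = unified
        .split('\n')
        .map(|line| {
            line.chars()
                // Keep whitespace such as tabs; drop NUL, escape codes, and similar.
                .filter(|c| !c.is_control() || c.is_whitespace())
                .collect::<String>()
                // Collapse each run of whitespace to one space and trim the line.
                .split_whitespace()
                .collect::<Vec<_>>()
                .join(" ")
        })
        .collect::<Vec<_>>()
        .join("\n");
    joined.trim().to_string()
}
```

Keeping newlines intact here, rather than flattening everything to spaces, preserves paragraph and code structure that the model should learn from.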

Deduplicate aggressively since repeated chunks can skew gradients and inflate scores without real learning. Split by domain so you can track how each source affects loss. Keep a small, frozen validation set from day one, and never let it leak into training. Store hashes and manifest files so every run can be traced back to the exact data snapshot.
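The deduplication, split, and manifest ideas can be sketched together. This version assumes documents are already normalized. It catches only exact duplicates, because near-duplicate detection (for example MinHash) needs more machinery. It uses 64-bit FNV-1a because that algorithm's output does not change between Rust releases, which matters once hashes are written to a manifest. Routing each document by its content hash keeps the validation split stable across runs, which helps prevent leakage. All names here are illustrative.

```rust
use std::collections::HashSet;

/// 64-bit FNV-1a hash. Unlike `std::collections::hash_map::DefaultHasher`,
/// whose algorithm is unspecified, this output is fixed, so hashes written
/// to a manifest stay comparable across Rust versions and machines.
fn fnv1a64(bytes: &[u8]) -> u64 {
    let mut hash: u64 = 0xcbf2_9ce4_8422_2325; // FNV offset basis
    for &b in bytes {
        hash ^= u64::from(b);
        hash = hash.wrapping_mul(0x0000_0100_0000_01b3); // FNV prime
    }
    hash
}

#[derive(Debug, PartialEq)]
enum Split {
    Train,
    Validation,
}

/// Routes a document by its content hash, so the same text always lands in
/// the same split no matter which shard or run it appears in.
fn assign_split(hash: u64, val_percent: u64) -> Split {
    if hash % 100 < val_percent {
        Split::Validation
    } else {
        Split::Train
    }
}

/// Drops exact duplicates (by hash) and splits the survivors into train and
/// validation sets. Each kept document is returned with its hash for the manifest.
fn dedup_and_split<'a>(
    docs: &[&'a str],
    val_percent: u64,
) -> (Vec<(u64, &'a str)>, Vec<(u64, &'a str)>) {
    let mut seen = HashSet::new();
    let (mut train, mut val) = (Vec::new(), Vec::new());
    for &doc in docs {
        let h = fnv1a64(doc.as_bytes());
        if !seen.insert(h) {
            continue; // exact duplicate of an earlier document
        }
        match assign_split(h, val_percent) {
            Split::Train => train.push((h, doc)),
            Split::Validation => val.push((h, doc)),
        }
    }
    (train, val)
}
```

Because the split depends only on content and `val_percent`, rerunning the pipeline on a larger snapshot keeps every earlier validation document in validation. A 64-bit collision would silently drop a document; at very large scale a wider hash may be worth the extra bytes.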

Tokenization Choices Without Regret

Tokens are your vocabulary. Pick a scheme that balances vocabulary size with sequence length. Byte Pair Encoding and unigram models are common because they handle rare words and emojis while keeping coverage broad. Train the tokenizer on your actual corpus rather than a generic dump, since domain terms will matter.
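To make the BPE idea concrete, here is a toy merge learner in Rust. It is a simplified sketch, not how a production tokenizer works: it starts from characters rather than bytes, skips pre-tokenization, and takes a word-frequency table as input. Each round counts adjacent symbol pairs weighted by word frequency and merges the most frequent pair. Ties are broken lexicographically so repeated runs learn the same merges. For real training, a library such as Hugging Face's `tokenizers` (itself written in Rust) is the usual choice; `learn_bpe` is a hypothetical name.

```rust
use std::collections::HashMap;

/// Learns up to `num_merges` BPE merge rules from a word -> count table.
/// Words start as sequences of single characters; each round merges the most
/// frequent adjacent pair everywhere it occurs.
fn learn_bpe(word_counts: &HashMap<String, usize>, num_merges: usize) -> Vec<(String, String)> {
    // Each entry holds a word's current symbols and how often the word occurs.
    let mut words: Vec<(Vec<String>, usize)> = word_counts
        .iter()
        .map(|(w, &c)| (w.chars().map(|ch| ch.to_string()).collect(), c))
        .collect();
    let mut merges = Vec::new();
    for _ in 0..num_merges {
        // Count adjacent symbol pairs, weighted by word frequency.
        let mut pair_counts: HashMap<(String, String), usize> = HashMap::new();
        for (symbols, count) in &words {
            for pair in symbols.windows(2) {
                *pair_counts.entry((pair[0].clone(), pair[1].clone())).or_insert(0) += *count;
            }
        }
        // Highest count wins; ties go to the lexicographically smallest pair
        // so the result does not depend on HashMap iteration order.
        let best = pair_counts
            .into_iter()
            .max_by(|(pa, ca), (pb, cb)| ca.cmp(cb).then_with(|| pb.cmp(pa)));
        let Some(((left, right), _)) = best else { break }; // no pairs left
        let merged = format!("{left}{right}");
        // Replace every occurrence of (left, right) with the merged symbol.
        for (symbols, _) in &mut words {
            let mut out = Vec::with_capacity(symbols.len());
            let mut i = 0;
            while i < symbols.len() {
                if i + 1 < symbols.len() && symbols[i] == left && symbols[i + 1] == right {
                    out.push(merged.clone());
                    i += 2;
                } else {
                    out.push(symbols[i].clone());
                    i += 1;
                }
            }
            *symbols = out;
        }
        merges.push((left, right));
    }
    merges
}
```

For example, with counts {low: 5, lower: 2, newest: 6, widest: 3}, the first two merges are ("e", "s") and then ("es", "t"), because the "est" ending shared by "newest" and "widest" is the most frequent pattern. Training on your own corpus is what surfaces domain-specific patterns like this.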

Decide early whether you will lowercase, keep case, or preserve accents. These choices shape sequence length and memory use. Keep the tokenizer versioned alongside the dataset; a mismatch here can ruin reproducibility.

Model Architecture In Rust Without Guesswork

Choose an architecture that your hardware can feed. A decoder-only transformer is often the simplest path. Depth and width set both capacity and cost, so size the model to match data volume and target latency.
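A back-of-the-envelope calculation helps with that sizing. The sketch below uses common approximations rather than exact counts: about 12·d² parameters per layer (4·d² for the attention projections, 8·d² for an MLP with a 4× hidden width), plus a vocabulary-by-width embedding table that is assumed to be tied with the output layer. It also uses the rule of thumb of roughly 6 training FLOPs per parameter per token. Biases, normalization weights, and learned position tables are ignored, and the function names are illustrative.

```rust
/// Rough parameter count for a decoder-only transformer, ignoring biases and
/// norm weights: ~4*d^2 per layer in attention (Q, K, V, output projections),
/// ~8*d^2 in an MLP with a 4x hidden width, plus a vocab x d embedding table
/// assumed to be shared with the output projection.
fn approx_params(n_layers: u64, d_model: u64, vocab: u64) -> u64 {
    12 * n_layers * d_model * d_model + vocab * d_model
}

/// Rule-of-thumb training compute: about 6 FLOPs per parameter per token
/// (forward pass plus backward pass).
fn approx_train_flops(params: u64, tokens: u64) -> f64 {
    6.0 * params as f64 * tokens as f64
}
```

With 12 layers, width 768, and a 50,257-token vocabulary, `approx_params` returns 123,532,032, in the same range as GPT-2 small. Training that model on 10 billion tokens comes to roughly 7.4e18 FLOPs, which you can divide by your measured sustained throughput to estimate wall-clock time before committing to a run.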

Add rotary or learned positional embeddings, stable normalization, and dropout tuned to your token count. Keep weight initialization consistent across runs. Favor components with strong references and wide adoption to avoid obscure edge cases. Document every hyperparameter and seed so the training script and the paper trail say the same thing.
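For the rotary option, here is a minimal sketch of applying rotary position embeddings (RoPE) to one query or key vector. It assumes the interleaved convention, where dimensions are rotated in adjacent pairs; some implementations instead rotate the first half of the vector against the second half, so match whatever your reference model uses. The base of 10000 follows the original RoPE paper but is treated as a tunable assumption here, and `apply_rope` is a hypothetical name.

```rust
/// Applies rotary position embedding in place to one query or key vector.
/// Pair (x[2i], x[2i+1]) is rotated by angle pos * base^(-2i/d), so low
/// dimensions rotate quickly and high dimensions rotate slowly.
fn apply_rope(x: &mut [f32], pos: usize, base: f32) {
    let d = x.len();
    assert!(d % 2 == 0, "RoPE needs an even head dimension");
    for i in 0..d / 2 {
        let theta = base.powf(-2.0 * i as f32 / d as f32);
        let (sin, cos) = (pos as f32 * theta).sin_cos();
        let (a, b) = (x[2 * i], x[2 * i + 1]);
        // Standard 2D rotation of the pair (a, b).
        x[2 * i] = a * cos - b * sin;
        x[2 * i + 1] = a * sin + b * cos;
    }
}
```

Because each pair is rotated rather than scaled, vector norms are preserved, and the dot product between a query at position m and a key at position n depends only on the offset m - n. That relative behavior is the main reason RoPE is a popular default.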

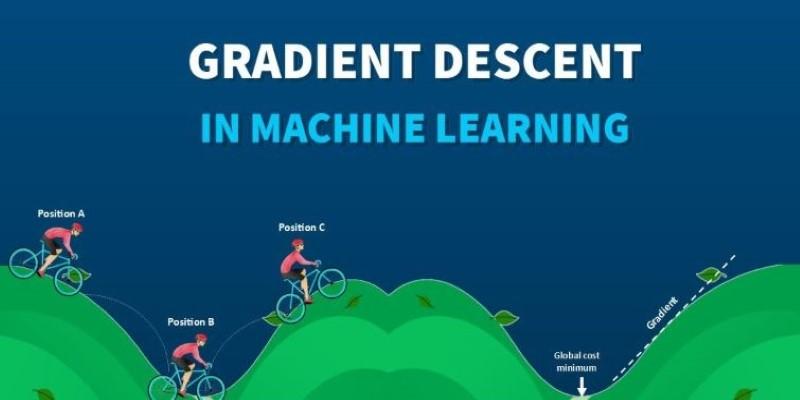

Training Loop And Optimization That Actually Runs

A smooth loop depends on clean batching, careful shuffling, and steady learning rate schedules. Warmup prevents early divergence, while cosine or linear decay helps later steps settle. Gradient clipping protects against spikes.
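Here is a minimal sketch of both pieces. It assumes a linear warmup followed by cosine decay to a floor learning rate, and clipping by the global L2 norm over all gradients flattened into one slice. The function names and the flat-slice layout are illustrative; a real trainer would iterate over per-tensor buffers.

```rust
use std::f64::consts::PI;

/// Linear warmup up to `max_lr`, then cosine decay down to `min_lr` at `total_steps`.
fn lr_at(step: u64, warmup: u64, total_steps: u64, max_lr: f64, min_lr: f64) -> f64 {
    if step < warmup {
        // Ramp from max_lr / warmup at step 0 up to max_lr.
        return max_lr * (step + 1) as f64 / warmup as f64;
    }
    if step >= total_steps {
        return min_lr;
    }
    // Fraction of the decay phase completed, from 0.0 to just under 1.0.
    let progress = (step - warmup) as f64 / (total_steps - warmup) as f64;
    min_lr + 0.5 * (max_lr - min_lr) * (1.0 + (PI * progress).cos())
}

/// Scales all gradients so their combined L2 norm is at most `max_norm`.
/// Returns the norm measured before clipping.
fn clip_grad_norm(grads: &mut [f32], max_norm: f32) -> f32 {
    let norm = grads.iter().map(|g| g * g).sum::<f32>().sqrt();
    if norm > max_norm && norm > 0.0 {
        let scale = max_norm / norm;
        for g in grads.iter_mut() {
            *g *= scale;
        }
    }
    norm
}
```

Logging the pre-clip norm returned by `clip_grad_norm` is a cheap way to see the spikes that clipping would otherwise hide.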

Mixed precision training can speed things up, but verify numerics at the start with short smoke tests. Track tokens per second, step time, and loss by data slice. If throughput drops, check for data loader stalls or tiny batches that leave accelerators idle.

Memory, Precision, And Throughput Tuning

Your aim is to keep accelerators busy while staying within memory limits. Sequence length, batch size, and activation checkpointing are the main levers. Sequence length is expensive, because the cost of standard attention grows quadratically with it, so pick a limit that fits real prompts rather than chasing a flashy number. Use gradient accumulation to simulate larger batches when memory is tight.
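Gradient accumulation works because, for a loss averaged over examples, the mean of equal-sized micro-batch gradients equals the full-batch gradient. The sketch below demonstrates this on a hypothetical one-parameter model with mean squared error, chosen only so the arithmetic is easy to check. The effective batch size is micro-batch size × accumulation steps × number of data-parallel devices.

```rust
/// Mean-squared-error gradient for a toy model y_hat = w * x, averaged over
/// the batch: d/dw mean((w*x - y)^2) = mean(2 * (w*x - y) * x).
fn mse_grad(w: f64, batch: &[(f64, f64)]) -> f64 {
    let sum: f64 = batch.iter().map(|&(x, y)| 2.0 * (w * x - y) * x).sum();
    sum / batch.len() as f64
}

/// Splits `batch` into `accum_steps` equal micro-batches, computes each
/// gradient separately, and averages them. Only one micro-batch needs to be
/// in memory at a time, yet the result matches the full-batch gradient.
fn accumulated_grad(w: f64, batch: &[(f64, f64)], accum_steps: usize) -> f64 {
    assert!(!batch.is_empty(), "batch must not be empty");
    assert!(
        accum_steps > 0 && batch.len() % accum_steps == 0,
        "use equal-sized micro-batches"
    );
    let micro = batch.len() / accum_steps;
    let mut acc = 0.0;
    for chunk in batch.chunks(micro) {
        acc += mse_grad(w, chunk);
    }
    acc / accum_steps as f64
}
```

If micro-batches differ in size, for example the last batch of an epoch, weight each gradient by its example count (or by its token count for language modeling) instead of taking a plain mean.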

Quantization-aware training can trim memory during inference later, but keep training weights in a format that stays stable across toolchains. Profile end to end. If kernels stall, look for host-to-device copies, unpinned buffers, or uneven shard sizes that leave devices out of sync.
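To show what quantization-aware training changes, here is a sketch of symmetric per-tensor int8 fake quantization, the quantize-then-dequantize step that a QAT forward pass typically inserts. It assumes a single scale per tensor, although per-channel scales are also common. The backward pass, usually a straight-through estimator that treats rounding as the identity, is not shown. The master weights are left untouched in f32, in line with keeping training weights in a stable format. `fake_quant_int8` is a hypothetical name.

```rust
/// Symmetric per-tensor int8 fake quantization: values are scaled into
/// [-127, 127], rounded, clamped, and scaled back to f32. The forward pass
/// sees these rounded values, so the model learns to tolerate the error it
/// will meet after a real int8 export. The input slice is not modified.
fn fake_quant_int8(weights: &[f32]) -> Vec<f32> {
    let max_abs = weights.iter().fold(0.0f32, |m, w| m.max(w.abs()));
    if max_abs == 0.0 {
        return weights.to_vec(); // all zeros: nothing to quantize
    }
    let scale = max_abs / 127.0;
    weights
        .iter()
        .map(|&w| (w / scale).round().clamp(-127.0, 127.0) * scale)
        .collect()
}
```

Each value ends up within half a quantization step (`scale / 2`) of the original, which is the error budget the model trains against.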

Evaluation You Can Explain

Perplexity is handy, but people want something they can read. Build a small, frozen evaluation suite that reflects your target language and style. Keep it text-based and documented. Report loss by domain slice so shifts show up early. Track calibration with simple checks that compare the probabilities the model assigns to its predictions against how often those predictions turn out to be correct.
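Per-slice reporting can be as simple as grouping token-level losses. This sketch assumes you already have, for each evaluated token, its slice name and the probability the model assigned to the actual next token. Perplexity for a slice is then the exponential of the mean negative log-likelihood. A real evaluator would accumulate log-probabilities straight from the model output to avoid underflow, and `perplexity_by_slice` is a hypothetical name.

```rust
use std::collections::BTreeMap;

/// Perplexity per domain slice: exp(mean negative log-likelihood) over the
/// tokens in that slice. Each record is (slice name, probability the model
/// gave to the correct next token). BTreeMap keeps slices in sorted order
/// so reports are stable from run to run.
fn perplexity_by_slice(records: &[(&str, f64)]) -> BTreeMap<String, f64> {
    // Per slice: (sum of negative log-likelihoods, token count).
    let mut sums: BTreeMap<String, (f64, usize)> = BTreeMap::new();
    for &(slice, p) in records {
        let entry = sums.entry(slice.to_string()).or_insert((0.0, 0));
        entry.0 += -p.ln();
        entry.1 += 1;
    }
    sums.into_iter()
        .map(|(slice, (nll, n))| (slice, (nll / n as f64).exp()))
        .collect()
}
```

A slice where the model assigns 0.25 to every correct token has perplexity 4, which gives a readable explanation of the number: the model is about as uncertain as a uniform choice among four tokens.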

Add regression tests for tokenizer quirks and newline handling, since small parsing mistakes can hide for weeks. When a change helps one metric and hurts another, write down the tradeoff so the next run does not repeat the same loop.

Packaging And Deployment In The Rust Ecosystem

Once the model trains, you need a serving path that is boring and fast. Package weights with a clear manifest, tokenizer files, and a small README that lists shapes and precision. A Rust-based runtime can deliver low latency with predictable memory behavior.

Expose a simple HTTP or gRPC interface and add structured logs. Cold start matters, so cache tokenizer state and warm attention caches where it helps. Keep a compatibility table that maps model versions to runtime versions so a rollback is safe at any hour.
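One way to keep that compatibility table honest is to store it as data that deployment tooling checks automatically. The sketch below is hypothetical. It assumes each model version records an inclusive range of tested runtime versions as (major, minor, patch) tuples, which Rust compares lexicographically, and it treats unknown models as incompatible.

```rust
use std::collections::BTreeMap;

/// Runtime version as (major, minor, patch); tuples compare field by field.
type Version = (u32, u32, u32);

/// Returns true if `runtime` falls inside the inclusive range of runtime
/// versions that `model` was tested against.
fn is_compatible(table: &BTreeMap<&str, (Version, Version)>, model: &str, runtime: Version) -> bool {
    match table.get(model) {
        Some(&(min, max)) => min <= runtime && runtime <= max,
        None => false, // unknown models are never considered safe to serve
    }
}
```

Before a rollback, check the target model against the runtime version that is currently deployed; a `false` result means the runtime has to roll back as well.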

Security, Compliance, And Licensing

Training from scratch does not exempt you from risk. Track licenses for every data source and keep proof of the rights you rely on. Strip personal data during ingestion and honor removals with a simple, tested process. Add input and output filters around the runtime to catch unsafe or banned content. Keep audit trails that tie a response to a model build, a tokenizer version, and a data manifest. If a partner asks for a review, you should be able to produce this chain without a scramble.

Team Workflow, Reproducibility, And Observability

Treat training as a product pipeline. Pin tool versions, capture seeds, and store config files next to checkpoints. Every run gets a tag, a short note, and a link to metrics. Dashboards should show tokens per second, step time, loss, memory headroom, and failure rates. Alert when throughput drifts or when loss stalls for too long.
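For the stalled-loss half of that alert, a patience counter is a common pattern. This minimal sketch assumes one loss value per logged step. It flags the run when the best loss has not improved by at least `min_delta` within `patience` steps. The struct name and thresholds are illustrative, and a throughput alert could follow the same shape.

```rust
/// Flags a run whose loss has not improved by at least `min_delta`
/// for `patience` consecutive observations.
struct PlateauAlert {
    best: f64,
    steps_since_best: usize,
    patience: usize,
    min_delta: f64,
}

impl PlateauAlert {
    fn new(patience: usize, min_delta: f64) -> Self {
        Self { best: f64::INFINITY, steps_since_best: 0, patience, min_delta }
    }

    /// Records one loss value; returns true once the loss has stalled.
    /// A NaN loss never counts as an improvement, so it also trips the alert.
    fn observe(&mut self, loss: f64) -> bool {
        if loss < self.best - self.min_delta {
            self.best = loss;
            self.steps_since_best = 0;
        } else {
            self.steps_since_best += 1;
        }
        self.steps_since_best >= self.patience
    }
}
```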

A tiny set of smoke tests should run before any full training job: tokenizer round trip, a single batch through the model, and a one-step update that changes loss in the expected direction. These habits save days, not minutes.
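Those smoke tests can stay small. The sketch below substitutes a byte-level tokenizer and a one-parameter linear model for the real ones, both hypothetical, to show the pattern. One check is a round trip that covers CRLF line endings, tabs, and an emoji. The other is a single gradient step that must lower the loss on a fixed batch. You would swap in your real tokenizer and model behind the same checks.

```rust
/// Stand-in byte-level tokenizer: every UTF-8 byte becomes one token id.
fn encode(text: &str) -> Vec<u32> {
    text.bytes().map(u32::from).collect()
}

/// Inverse of `encode`. Returns None if an id is not a byte or the bytes
/// are not valid UTF-8.
fn decode(ids: &[u32]) -> Option<String> {
    let bytes = ids
        .iter()
        .map(|&id| if id <= 0xFF { Some(id as u8) } else { None })
        .collect::<Option<Vec<u8>>>()?;
    String::from_utf8(bytes).ok()
}

/// Mean squared error of a toy model y_hat = w * x over a fixed batch.
fn loss(w: f64, batch: &[(f64, f64)]) -> f64 {
    batch.iter().map(|&(x, y)| (w * x - y).powi(2)).sum::<f64>() / batch.len() as f64
}

/// Gradient of `loss` with respect to w.
fn grad(w: f64, batch: &[(f64, f64)]) -> f64 {
    batch.iter().map(|&(x, y)| 2.0 * (w * x - y) * x).sum::<f64>() / batch.len() as f64
}

/// One plain gradient-descent step; the smoke test asserts that loss drops.
fn sgd_step(w: f64, batch: &[(f64, f64)], lr: f64) -> f64 {
    w - lr * grad(w, batch)
}
```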

Conclusion

Training an LLM from scratch in Rust is about control, clarity, and steady progress. Clean data flows into a tokenizer that fits your corpus. An architecture sized to your hardware learns with a stable loop that you can explain and repeat.

With careful choices and disciplined notes, you end up with a model that not only learns well but also ships without drama. That is the goal: repeatable training, readable evaluation, and a runtime you trust to handle real traffic.